Peer-to-Peer Technology for Faster Large File Transfers

Early Internet and P2P Technology

The early Internet was predominantly a peer-to-peer system. It was a network populated by academics and researchers, and the computers connected to it were largely equal: each contributed as much information as it received. At this early stage, transferring large files quickly was not a consideration.

As the Internet matured, the client-server model came to dominate, especially with the advent of HTTP and the World Wide Web. In this model, roles are separated: consumers act as “clients” that connect to “servers” somewhere on the network, and the servers distribute content and data. Serving a massive audience requires a vast number of these servers, and it was during this period that transferring large files became an inherent problem for the model.

As demand grows in this model, performance declines and fragility increases. A fixed pool of servers must deliver large files to an ever larger number of clients. Because the servers' capacity is shared among all of those clients, the bandwidth available to each one shrinks as the load grows. Such systems are also inherently fragile. With the servers as the only source of content, they become single points of failure that can take an application down completely.

Technologies such as Content Delivery Networks (CDNs) therefore emerged. They aggregate and multiplex server capacity across many sources of content to speed up the sharing of large files. A CDN can absorb an unpredictable burst of demand more easily, and placing capacity closer to clients improves performance. These innovations made the client-server model somewhat more robust, but at considerable cost, particularly for large file sharing.

Yet despite its inefficiency, the client-server model remains predominant today. Common examples include most web content, search engines, cloud computing applications, and even familiar tools like FTP and rsync.

Peer-to-Peer Is the Fastest Way to Transfer Files

Peer-to-peer systems are fundamentally different, and they are the fastest way to transfer files. In a P2P file transfer system, every “consumer” is also a “producer.” In the language of the client-server model, each participant is both “client” and “server.” This makes peer-to-peer systems organically scalable as well as fast for large files: as demand for any content grows, so does its supply. As demand grows, a P2P system also becomes more fault tolerant and can actually get faster for large files. The client-server model does the opposite, becoming noticeably slower and more fragile under the same circumstances.
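One way to make this scaling argument concrete is the standard idealized lower bound on the time needed to distribute a file to N downloaders, as presented in networking textbooks such as Kurose and Ross's Computer Networking. This model is brought in here for illustration and is not part of the original analysis. The symbols are assumptions of that model: F is the file size, u_s is the server's upload rate, u_i is downloader i's upload rate, and d_min is the slowest downloader's download rate.

```latex
% F: file size, N: number of downloaders, u_s: server upload rate,
% u_i: upload rate of downloader i, d_min: slowest download rate
D_{\text{cs}} \ge \max\left\{ \frac{N F}{u_s},\ \frac{F}{d_{\min}} \right\}

D_{\text{P2P}} \ge \max\left\{ \frac{F}{u_s},\ \frac{F}{d_{\min}},\
    \frac{N F}{u_s + \sum_{i=1}^{N} u_i} \right\}

% With identical peers (u_i = u), the last P2P term is N F / (u_s + N u) < F / u,
% so D_P2P stays bounded as N grows, while N F / u_s grows linearly in N.
```

The client-server bound grows linearly with the number of downloaders, because only the server uploads. In the P2P bound, every new downloader also adds upload capacity to the denominator.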

To summarize this section, peer-to-peer has several advantages:

It is faster

It is more robust

It reduces the load on the server

It makes efficient use of infrastructure

Peer-to-Peer Large File Transfer in Action

Using a specific example, this section shows why peer-to-peer beats a client-server architecture for large file transfers. To keep the explanation simple, we make two assumptions. First, the file we want to transfer over a P2P connection has five blocks. Second, each computer has a connection channel capable of sending one block per cycle.

We have a Sender that needs to send data to four devices A, B, C, and D (the Receivers).

Stage 1 - Splitting the file:

The Sender splits the file into independent pieces and creates a meta-information data block that describes them. In our example, the file consists of 5 pieces, which we mark with colored dots: Red, Green, Yellow, Blue, and Black.
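Stage 1 can be sketched in a few lines. This is a minimal illustration that assumes fixed-size pieces and a SHA-1 hash per piece, loosely similar to BitTorrent's metainfo. The function names and the meta-information fields (`split_file`, `verify_piece`, `piece_hashes`) are hypothetical and do not describe Resilio's actual format.

```python
import hashlib


def split_file(data, piece_length):
    """Split data into fixed-size pieces and build meta-information for them."""
    pieces = [data[i:i + piece_length] for i in range(0, len(data), piece_length)]
    meta = {  # hypothetical field names, for illustration only
        "length": len(data),
        "piece_length": piece_length,
        "num_pieces": len(pieces),
        # One hash per piece lets a Receiver check each piece independently,
        # no matter which node it was downloaded from.
        "piece_hashes": [hashlib.sha1(p).hexdigest() for p in pieces],
    }
    return pieces, meta


def verify_piece(meta, index, piece):
    """Check a downloaded piece against the Sender's meta-information."""
    return hashlib.sha1(piece).hexdigest() == meta["piece_hashes"][index]


COLORS = ["Red", "Green", "Yellow", "Blue", "Black"]

if __name__ == "__main__":
    data = bytes(range(50))  # a stand-in 50-byte "file"
    pieces, meta = split_file(data, piece_length=10)
    for color, piece in zip(COLORS, pieces):
        print(color, len(piece), "bytes, verified:", verify_piece(meta, COLORS.index(color), piece))
```

Per-piece hashes are what make the pieces "independent". A Receiver can accept a piece from any peer and still detect corruption, without trusting that peer.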

Stage 2 - Receiving the meta-information:

The Receivers request the file meta-information. When Receivers get the meta-information, they know the file in question contains five pieces, and at the moment only the Sender has them.

Stage 3 - Downloading the pieces:

Each cycle, every Receiver randomly selects one piece it does not have yet and starts downloading it from any node that already holds that piece, whether the Sender or another Receiver. This continues until every Receiver has every piece of the file.
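The three stages can be simulated under the article's one-block-per-cycle assumption. This is a minimal sketch with some added assumptions of our own. Each node uploads at most one piece per cycle, and each Receiver downloads at most one. A piece received during a cycle can only be forwarded from the next cycle on. Receivers choose a random missing piece, then a random free node that holds it. The `simulate` function and its parameters are hypothetical.

```python
import random


def simulate(num_receivers=4, num_pieces=5, p2p=True, seed=None):
    """Count cycles until every Receiver holds every piece.

    Node 0 is the Sender; nodes 1..num_receivers are the Receivers.
    Each cycle, every node uploads at most one piece and every Receiver
    downloads at most one piece. A piece received during a cycle can only
    be forwarded from the next cycle on.
    """
    rng = random.Random(seed)
    have = [set(range(num_pieces))] + [set() for _ in range(num_receivers)]
    cycles = 0
    while any(len(h) < num_pieces for h in have[1:]):
        cycles += 1
        snapshot = [set(h) for h in have]  # holdings at the start of this cycle
        busy = set()                       # nodes that already uploaded this cycle
        order = list(range(1, num_receivers + 1))
        rng.shuffle(order)
        for r in order:
            # Client-server: only the Sender may upload. P2P: any other node may.
            sources = [0] if not p2p else [n for n in range(len(have)) if n != r]
            # Map each missing piece to the free nodes that can supply it.
            available = {}
            for src in sources:
                if src in busy:
                    continue
                for piece in snapshot[src] - have[r]:
                    available.setdefault(piece, []).append(src)
            if not available:
                continue
            piece = rng.choice(sorted(available))  # random missing piece
            src = rng.choice(available[piece])     # random node that has it
            busy.add(src)
            have[r].add(piece)
    return cycles


if __name__ == "__main__":
    runs = [simulate(seed=s) for s in range(1000)]
    print("client-server:", simulate(p2p=False, seed=0), "cycles")
    print("P2P, random piece choice: best", min(runs), "worst", max(runs))
```

With `p2p=False`, only the Sender uploads, so exactly one block moves per cycle. With `p2p=True`, Receivers forward pieces to each other. Because pieces are chosen at random, an individual P2P run may take longer than the best possible schedule.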

So, How Many Cycles Would It Take to Transfer the Data?

With a client-server architecture, the transfer takes about three times longer. The Receivers can download pieces only from the Sender, which sends one block per cycle. Delivering 5 pieces to each of 4 Receivers therefore takes 4 × 5 = 20 cycles. In the P2P transfer, most of the pieces come from other Receivers.

Using P2P architecture: as few as 7 cycles

Using Client-Server architecture: 20 cycles
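Both numbers can be checked by hand under the same assumptions: every node uploads one block per cycle, and a block received in one cycle can be forwarded from the next cycle on. The P2P figure is a lower bound. A 7-cycle schedule does exist, so 7 is the best case under these assumptions, while a randomly chosen schedule can take longer.

```latex
% N = 4 Receivers, K = 5 pieces

% Client-server: the Sender alone uploads all N * K blocks.
T_{\text{cs}} = N K = 4 \times 5 = 20 \text{ cycles}

% P2P: the Sender must upload K distinct pieces, one per cycle, so the last of
% them first leaves the Sender in cycle t \ge K. After cycle t it has 2 holders
% (the Sender and one Receiver), and the number of holders can at most double
% per cycle; all N + 1 nodes hold it only when 2^{j} \ge N + 1 after cycle t + j - 1.
T_{\text{P2P}} \ge K + \lceil \log_2 (N + 1) \rceil - 1 = 5 + 3 - 1 = 7 \text{ cycles}

\frac{T_{\text{cs}}}{T_{\text{P2P}}} = \frac{20}{7} \approx 2.9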

Real-World Example of Fast File Transfer & P2P

Let's say we have a server and ten clients, all connected over a 1 Gbps network, and we need to send a 100 GB file to every client. A peer-to-peer solution completes the transfer in 17 minutes. The client-server approach takes more than two hours. The server alone must push 10 × 100 GB = 1,000 GB (8,000 Gb) through its 1 Gbps link, which takes at least 8,000 seconds. As demand grows, whether through larger files or more participants, the performance gap between the two models widens.
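The numbers in this example can be sanity-checked with the idealized distribution-time lower bounds from networking textbooks (for example, Kurose and Ross). These bounds are brought in for illustration. The sketch assumes decimal gigabytes, 1 Gbps for every upload and download link, and no protocol overhead. The function name `distribution_time_bounds` is hypothetical.

```python
def distribution_time_bounds(file_bits, n_clients, server_up, peer_up, min_down):
    """Idealized lower bounds, in seconds, on distributing one file to n_clients."""
    # Client-server: the server uploads every copy; the slowest client must download one.
    cs = max(n_clients * file_bits / server_up, file_bits / min_down)
    # P2P: the server uploads at least one copy; clients share the rest of the load.
    p2p = max(file_bits / server_up,
              file_bits / min_down,
              n_clients * file_bits / (server_up + n_clients * peer_up))
    return cs, p2p


F = 100e9 * 8  # 100 GB in bits (decimal gigabytes assumed)
LINK = 1e9     # 1 Gbps on every link (assumption)
cs, p2p = distribution_time_bounds(F, 10, LINK, LINK, LINK)
print(f"client-server >= {cs / 60:.1f} min, P2P >= {p2p / 60:.1f} min")
# prints: client-server >= 133.3 min, P2P >= 13.3 min
```

The article's 17-minute P2P figure sits above the ideal 13.3-minute bound, which is presumably what real protocol overhead adds. The client-server bound alone is well over an hour.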

Conclusions on P2P & Fast File Sharing

Speed and robustness are critical to modern business. For almost any data distribution task, a peer-to-peer solution will transfer files faster than a client-server (point-to-point) one. The difference becomes significant as data size and business scale (the number of locations or endpoints) grow.

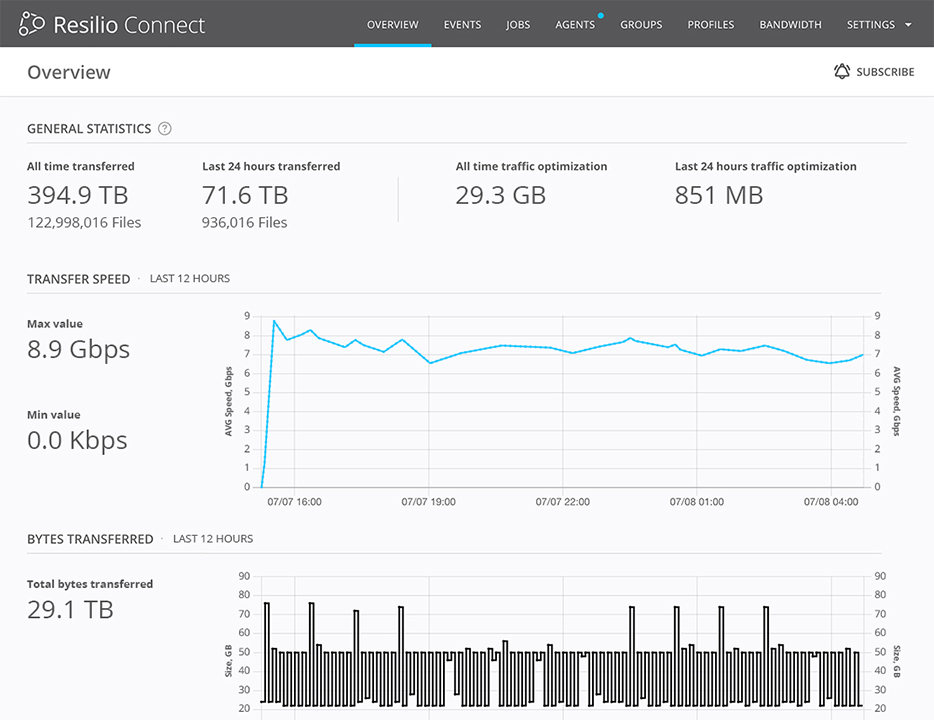

Resilio further employs rsync-like delta encoding and WAN-optimized, UDP-based transport protocols to make the most efficient use of bandwidth and deliver the fastest speeds over any network and configuration.
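This article does not describe how Resilio's delta encoding works. As a rough illustration of the rsync technique it references, here is a minimal sketch. The receiver computes a weak checksum and a strong checksum for each fixed-size block of its old copy. The sender then slides a window over the new file using rsync's rolling weak checksum. It emits a reference for each block that matches and literal bytes for everything else. The function names, the use of MD5 as the strong hash, and the tiny block size are illustrative choices, not Resilio's implementation.

```python
import hashlib

MOD = 1 << 16  # rsync's weak checksum components are taken modulo 2**16


def weak_checksum(block):
    """rsync-style weak checksum (a, b) of a block of bytes."""
    a = sum(block) % MOD
    b = sum((len(block) - i) * x for i, x in enumerate(block)) % MOD
    return a, b


def signature(old, block_size):
    """Receiver side: weak and strong checksums of each full block of the old file."""
    sig = {}
    for idx in range(len(old) // block_size):
        block = old[idx * block_size:(idx + 1) * block_size]
        sig.setdefault(weak_checksum(block), []).append((idx, hashlib.md5(block).digest()))
    return sig


def delta(sig, new, block_size):
    """Sender side: encode new as ("copy", block index) and ("literal", bytes) ops."""
    ops, literal = [], bytearray()
    i, a, b = 0, None, None
    while i + block_size <= len(new):
        window = new[i:i + block_size]
        if a is None:
            a, b = weak_checksum(window)
        match = None
        # Only compute the expensive strong hash when the cheap weak checksum hits.
        for idx, strong in sig.get((a, b), []):
            if hashlib.md5(window).digest() == strong:
                match = idx
                break
        if match is not None:
            if literal:
                ops.append(("literal", bytes(literal)))
                literal = bytearray()
            ops.append(("copy", match))
            i += block_size
            a = None  # next window starts fresh, so recompute from scratch
        else:
            literal.append(new[i])
            if i + block_size < len(new):
                # Roll the window one byte: drop new[i], add new[i + block_size].
                a = (a - new[i] + new[i + block_size]) % MOD
                b = (b - block_size * new[i] + a) % MOD
            i += 1
    literal.extend(new[i:])
    if literal:
        ops.append(("literal", bytes(literal)))
    return ops


def patch(old, ops, block_size):
    """Receiver side: rebuild the new file from the old file plus the delta."""
    out = bytearray()
    for kind, value in ops:
        out += old[value * block_size:(value + 1) * block_size] if kind == "copy" else value
    return bytes(out)
```

The rolling update costs a constant amount of work per byte, which is what lets the sender test every byte offset cheaply. Only the blocks that don't match travel over the network as literals.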

Resilio’s technology offers the fastest delivery times across the broad range of network speeds common in the modern enterprise. Our products combine three of the most powerful data transmission technologies (peer-to-peer, rsync-like delta encoding, and WAN optimization) into a single solution.

If you need to synchronize data across servers, distribute data to multiple locations and endpoints, and/or deliver data over unreliable networks, please contact us using the form below for access to the world’s fastest data transfer solution.