Server replication software is a critical component of the IT toolbox for large, distributed enterprises. Some server vendors bundle proprietary sync solutions that work in simple, homogeneous environments. But companies often run into challenges when they try to sync across multiple vendors or hybrid architectures (or they simply want better performance).

In our experience, companies often seek new server replication software for one of four reasons:

- Speed: The current solution doesn’t sync or transfer fast enough for their workflow.

- Reliability: IT teams are wasting too much time trying to fix bugs and breakdowns with the current solution, with little help from customer support.

- Large Files: Teams need a solution that can better handle large files and/or large quantities of files.

- Growing Infrastructure: They may be adding new hardware or migrating from on-prem hardware to the cloud, from the cloud to on-prem, or expanding their operation.

To help companies find a server replication solution that’s right for them, we put together this guide discussing the factors you should consider when seeking a server replication software (SRS) solution: speed and scale, reliability, security, visibility, and compatibility with your specific use cases.

Our guide is followed by a review of seven of the most popular server replication solutions on the market. We provide a deep dive into our own server replication software, Resilio Connect, and describe how it seeks to fulfill the criteria above.

If you want to learn more about Resilio Platform for your team, schedule a demo here.

5 Factors to Consider When Choosing Server Replication Software

To help you narrow down your list and focus on the right solutions, look for server replication software that meets these five criteria:

- Speed and Scale

- Reliability and Fault Tolerance

- Security

- Centralized Management and Visibility

- Use Cases

1. Speed and Scale

In our experience, speed and scale are two of the biggest issues that have historically driven customers away from their old server replication software and to us.

So, when analyzing a server replication solution, consider:

- Transfer speed: Your solution must transfer files in an acceptable time frame so it doesn’t become a bottleneck in your workflow. Think about the typical file sizes you’ll need to replicate, the number of servers you need to replicate between, and how long your end users can wait for transfers and replication before their work is significantly slowed down. If something close to “real-time” synchronization is a must, for example, then you need a tool that can detect changes and start transfers immediately.

- Scale: In concert with speed, if you’re working with large files, a large number of files, or synchronization between many servers, you’ll need a solution that can meet the scale of your requirements without breaking down (and at acceptable speeds).

- Quick deploy: You should be able to quickly and painlessly migrate to your new SRS tool, with as little downtime and interruption as possible. Resilio Connect, for example, can be deployed with your existing infrastructure in as little as two hours.
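To make the transfer-speed question concrete, here is a rough back-of-the-envelope sketch in Python. It only divides data volume by usable bandwidth. The function names and the `efficiency` factor (the fraction of nominal bandwidth a tool actually achieves) are illustrative assumptions, not measurements of any product.

```python
def transfer_time_seconds(size_gb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Estimate the seconds needed to move size_gb gigabytes over a link.

    link_gbps is the nominal link speed in gigabits per second.
    efficiency is an assumed fraction of that speed actually achieved.
    """
    if size_gb < 0 or link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("size must be >= 0, link speed > 0, efficiency in (0, 1]")
    size_gigabits = size_gb * 8  # 1 byte = 8 bits
    return size_gigabits / (link_gbps * efficiency)


def fits_window(size_gb: float, link_gbps: float, window_seconds: float,
                efficiency: float = 0.8) -> bool:
    """Return True if the estimated transfer finishes inside the time window."""
    return transfer_time_seconds(size_gb, link_gbps, efficiency) <= window_seconds
```

Plugging in your own typical file sizes and link speeds gives a quick sanity check on whether a tool's advertised throughput would meet your workflow's deadlines.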

2. Reliability and Fault Tolerance

Customers have also told us that one of their biggest frustrations with replication tools is reliability. Anyone who’s worked with DFSR knows how often it breaks down and how much time is wasted on error-resolution (in fact, we’ve written multiple articles on DFSR errors and alternatives).

Look for a server replication software solution that:

- Always sends all files to their appropriate destination

- Transfers files without errors or corruption

- Recovers from transfer and link failure/slowdown quickly and effectively

- Has customer support that responds quickly

3. Security

Between cybersecurity threats and the need to protect sensitive data, high security is a must for any server replication software. Your solution must:

- Ensure no one outside your company can access your files

- Ensure that your files are only transferred to approved destinations

- Enable you to operate in an air-gapped environment, if needed

- Encrypt all data in transit

4. Centralized Management and Visibility

Many of our customers came to us complaining about a lack of visibility with DFSR and similar server replication tools. In other words, they didn’t have a centralized location where they could see metrics, build reports, view error logs, and control details like bandwidth usage and schedules.

In contrast, having a centralized management UI allows teams to:

- Spot and resolve issues using real-time insight into the status and performance of server replication.

- Control costs by measuring and adapting to resource usage.

5. Use Cases

Finally, beyond the four fundamental requirements above, you also should make sure your replication tool can adapt to the specific scenarios and use cases your organization requires. This includes:

- Network reliability: If you need to perform data transfers in or to remote locations or over bad networks, you need a solution that can still deliver in areas with questionable network access.

- Configurability: In order to meet your needs and optimize performance, you should be able to configure parameters such as bandwidth usage, scripts, and transfer triggers. You should also have granular control over settings such as buffer sizes, disk I/O threads, and packet size.

Below, we discuss seven server replication software solutions, starting with an in-depth discussion of our own, Resilio Platform, and how we’ve gone about trying to fulfill the five factors above.

Resilio Connect: Fast, Reliable, P2P-based Server Replication

Resilio Platform is a high speed, scalable, P2P solution for syncing and replicating data between servers in real-time.

Connect is used by leading companies in media (Turner Sports, Skywalker Sound, etc.), tech and video games (Larian Studios, Electronic Arts, etc.), retail/healthcare/engineering (Mercedes-Benz, YUM!, Buckeye Power Sales, etc.), and maritime remote operations (Northern Marine Group, Lindblad Expeditions, and more).

What differentiates Resilio from many other server replication tools is its P2P file sharing architecture and WAN acceleration technology. Together, they give Resilio some of the highest transfer speeds available (10+ Gbps) and let it scale easily whether you’re replicating data between 2 servers or 200. Let’s look at why in detail.

Speed and Scale: How Resilio Achieves the Highest Replication Speeds in the Industry

Resilio’s speed and scalability are primarily the result of two features: Our P2P architecture and WAN optimization.

P2P Architecture

Most server replication tools work with a client-server architecture — a model where one device is designated as the Server and all others as Clients. In this setup, the Server provides the Clients with data and can receive data from the Clients. The Clients, however, can only send or receive data to/from the Server.

Because the Client computers have limited privileges, replication tools that use the client-server model have to rely on “cloud-hopping”: In order to transfer data from Client A to Client B, the data must first transfer to the Server.

But Resilio’s P2P architecture is omnidirectional. Every computer is equally privileged, enabling it to overcome the limits of cloud-hopping and deliver:

1. Faster Transfer Speed

In a P2P setup, every device can send data directly to any other device. And when moving data to/from several devices, each receiver can also become a sender — resulting in transfer speeds that are 3 – 10x faster than any client-server solution.

2. Higher Availability

In the client-server model, the entire infrastructure is dependent upon the Server computer, which must be online at all times in order for things to work. But issues such as operating system errors, network disruption, hardware failures, and more can bring the Server down or impact its availability.

In a P2P model, however, any available device can provide the necessary data or service to any other device — so you’re never dependent on just one machine and your system is always available.

3. Organic Scalability

In a client-server model, scaling up your infrastructure to handle larger demand (i.e., more data, more users, and/or more devices) is complex and costly. For example, if you have more Clients than a single Server can serve, you’ll need to deploy another Server and design a system that balances the load between them. In addition, every Client you add brings more “cloud-hopping” slowdowns: Each change on any Client must first go to the Server before being pushed to the other Clients.

But a P2P architecture is organically scalable. Every device can share data with every other device. It’s a mesh network. So the more devices you add, the more “servers” you are effectively adding. This dramatically speeds up the transfer process instead of slowing it down: New data on client A can be sent to client B, C, D, etc. and each of them can now send that data to a bunch of other clients, and so on.

In other words, more demand also inherently creates more supply — making P2P solutions perfect for applications that involve large data and/or many users.
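The scaling argument above can be illustrated with a deliberately simple toy model. It assumes every device has the same bandwidth and can upload exactly one full copy of the data per "round"; real networks and real replication tools (including Resilio) are more nuanced, and the function names here are hypothetical.

```python
def client_server_rounds(receivers: int) -> int:
    """Rounds needed when a single Server uploads one full copy per round."""
    return receivers


def p2p_rounds(receivers: int) -> int:
    """Rounds needed when every device that already holds the data
    uploads one full copy per round, so the number of holders doubles."""
    holders = 1  # only the original sender has the data at the start
    rounds = 0
    while holders < receivers + 1:
        holders *= 2
        rounds += 1
    return rounds
```

Under these assumptions, delivering to 200 receivers takes 200 rounds through a single Server but only 8 rounds when receivers also act as senders, which is the "more demand creates more supply" effect in miniature.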

Further reading: You can read more about the benefits of P2P file collaboration here.

WAN Acceleration

While P2P architecture optimizes the structure of data transfer, enabling each device to act as a server and communicate with any other device in your network, WAN acceleration optimizes how and when data gets transferred in order to maximize transfer speed, security, and reliability.

Resilio Platform optimizes WAN transfer better than any other solution available using:

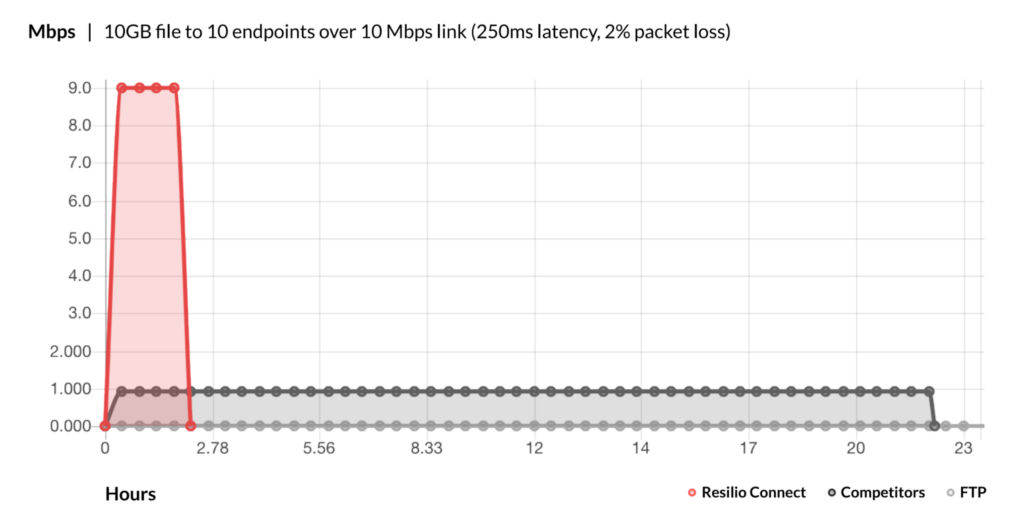

1. Parallel Transfer

Other WAN-optimized server replication solutions (e.g., Aspera, Signiant, and UDT) are designed for the client-server model and can only optimize data transfer point-to-point. But with Resilio’s P2P N-way sync architecture, files can be transferred and replicated across the entire network of devices, rather than just point-to-point.

In other words:

- Files are split into several blocks that independently transfer to multiple destinations.

- The destinations can exchange blocks between themselves independently from the sender.

This dramatically increases the speed of data delivery by leveraging internet channels across all locations. And the transfer speed increases as more endpoints are added. Resilio Platform will be 50% faster than one-to-one solutions in a 1:2 transfer scenario and 500% faster in a 1:10 scenario.
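As a rough illustration of the two bullet points above, the following sketch splits a payload into blocks, has the sender upload each block to only one destination, and then lets the destinations fill in the rest from each other. This is a simplified simulation under assumed round-robin block assignment, not Resilio's actual scheduling logic; all names are hypothetical.

```python
def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut the payload into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def simulate_swarm(data: bytes, block_size: int, destinations: list[str]):
    """Return (bytes uploaded by sender, bytes exchanged by peers, rebuilt files)."""
    blocks = split_into_blocks(data, block_size)
    received = {d: {} for d in destinations}
    sender_bytes = 0
    peer_bytes = 0

    # Phase 1: the sender uploads each block exactly once, round-robin.
    first_holder = {}
    for i, block in enumerate(blocks):
        dest = destinations[i % len(destinations)]
        received[dest][i] = block
        first_holder[i] = dest
        sender_bytes += len(block)

    # Phase 2: destinations fetch missing blocks from the peer that holds them.
    for i, holder in first_holder.items():
        for dest in destinations:
            if dest != holder:
                received[dest][i] = received[holder][i]
                peer_bytes += len(blocks[i])

    rebuilt = {d: b"".join(received[d][i] for i in range(len(blocks)))
               for d in destinations}
    return sender_bytes, peer_bytes, rebuilt
```

In this model the sender uploads the payload once regardless of how many destinations exist, whereas a point-to-point tool would upload it once per destination. The remaining traffic is carried by the destinations' own links.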

2. Resilio’s Proprietary Transfer Protocol: ZGT

Resilio Platform optimizes file transfer even more using our proprietary transfer protocol: Zero Gravity Transport™ (ZGT).

Traditional transfer protocols weren’t designed for modern WANs, and are hindered by high packet transmission time and packet loss. ZGT™ minimizes the impact of packet loss and high latency and maximizes transfer speed across any network using:

- A bulk transfer strategy: To create a uniform packet distribution in time, the sender sends packets periodically with a fixed packet delay.

- Interval acknowledgement: It uses interval acknowledgment for a group of packets with additional information about lost packets.

- Congestion control: It uses a congestion control algorithm to calculate the ideal send rate, and it periodically probes the RTT (Round Trip Time) to inform the algorithm and maintain an optimal sending rate.

- Delayed retransmission: It retransmits lost packets once per RTT to decrease unnecessary retransmissions.
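The ideas in this list can be sketched generically. The code below is not ZGT and makes no claim about its internals; it is a minimal illustration of fixed-delay pacing, an acknowledgment that covers an interval of packets and names the lost ones, and retransmission limited to once per RTT. The congestion control step is left out: the send rate is simply taken as an input. All names are hypothetical.

```python
from dataclasses import dataclass


def packet_delay_seconds(packet_bytes: int, send_rate_bps: float) -> float:
    """Fixed gap between packets that yields the target send rate."""
    return packet_bytes * 8 / send_rate_bps


@dataclass
class IntervalAck:
    """One acknowledgment for a whole range of sequence numbers."""
    first_seq: int
    last_seq: int
    lost: list[int]  # sequence numbers in the range that never arrived


def build_interval_ack(first_seq: int, last_seq: int, received: set[int]) -> IntervalAck:
    """Receiver side: summarize an interval and list the missing packets."""
    lost = [s for s in range(first_seq, last_seq + 1) if s not in received]
    return IntervalAck(first_seq, last_seq, lost)


def due_for_retransmit(lost: list[int], last_sent_at: dict[int, float],
                       now: float, rtt: float) -> list[int]:
    """Sender side: resend a lost packet only if its previous
    transmission was at least one RTT ago."""
    return [s for s in lost if now - last_sent_at[s] >= rtt]
```

Waiting a full RTT before resending gives an in-flight packet or acknowledgment time to arrive, which is why this pattern reduces unnecessary retransmissions.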

Further reading: For more information on how Resilio optimizes WAN transfer, check out our WAN optimization whitepaper.

The combination of P2P architecture and WAN acceleration enables Resilio to provide high-performance transfer regardless of network conditions, enabling enterprise organizations to:

- Reduce costs: Better WAN performance enables organizations to eliminate expensive hardware, streamline infrastructure, and simplify administration.

- Achieve faster network traffic: Incredible volumes of data can be moved across the WAN without causing network congestion and hindering network performance, while also avoiding data loss and unwanted packet retransmission.

- Improve productivity: WAN optimization can be tailored for SaaS solutions and cloud platforms (like Salesforce, AWS, and Azure), so IT and end-users can perform their jobs more efficiently.

Resilio’s flexible BYO storage means it can be deployed with your existing infrastructure (on-prem, edge, or cloud). And because it is vendor-agnostic, you can have Resilio Platform up, running, and syncing in as little as 2 hours.

Try our transfer speed calculator to see how much time we can save for you.

High Reliability & Fault Tolerance

Resilio is also the most resilient server synchronization solution available.

Resilio’s code is reliable and well-tested, with a codebase that’s been around for 10+ years and installed on more than 10M devices of all types. And it uses simple data structures that are corruption-proof to keep an index of the file system.

In addition to speed, our P2P architecture and WAN Optimization provide:

- Persistency: Mesh networking means there’s no single point of failure. Resilio can dynamically route around outages and lost connections, and it retries transfers until they’re complete.

- Faster recovery: Omnidirectional transfer and the ability to fully utilize any network connectivity enable Resilio to meet sub-five-second RPOs (Recovery Point Objectives) and RTOs (Recovery Time Objectives) within minutes of an outage, making it the ideal solution for quick disaster recovery.

- Resiliency: Because Resilio uses file chunking (i.e., transferring files in small chunks), it can perform a “checksum restart” in the event of a network failure mid-transfer. In other words, it will simply resume the transfer where it left off (unlike other tools which must restart the transfer from the beginning).

- Reliable replication: With cryptographic file validation, Resilio never creates corrupted files.
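The chunk-and-resume idea described above can be sketched with per-chunk hashes. This is a generic illustration using SHA-256 from Python's standard library, not Resilio's implementation; the function names and the in-memory byte strings standing in for files are assumptions made for brevity.

```python
import hashlib


def chunk_hashes(data: bytes, chunk_size: int) -> list[str]:
    """SHA-256 digest of every fixed-size chunk of the data."""
    return [hashlib.sha256(data[i:i + chunk_size]).hexdigest()
            for i in range(0, len(data), chunk_size)]


def chunks_to_send(source: bytes, partial: bytes, chunk_size: int) -> list[int]:
    """Indexes of chunks the destination is missing or holds incorrectly."""
    src = chunk_hashes(source, chunk_size)
    dst = chunk_hashes(partial, chunk_size)
    return [i for i, h in enumerate(src) if i >= len(dst) or dst[i] != h]


def resume_transfer(source: bytes, partial: bytes, chunk_size: int) -> bytes:
    """Complete an interrupted transfer by sending only the needed chunks,
    then validate the whole result against the source hash."""
    chunks = [partial[i:i + chunk_size] for i in range(0, len(source), chunk_size)]
    for i in chunks_to_send(source, partial, chunk_size):
        chunks[i] = source[i * chunk_size:(i + 1) * chunk_size]
    result = b"".join(chunks)
    if hashlib.sha256(result).digest() != hashlib.sha256(source).digest():
        raise ValueError("integrity check failed after resume")
    return result
```

If a 16-byte transfer with 4-byte chunks is cut off after 10 bytes, only the truncated third chunk and the missing fourth chunk are resent. The first two chunks are verified by hash and kept.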

Finally, one of the most common complaints of our customers about their previous server replication software solutions is the lack of customer support. That’s why we’ve made having reliable, always available and fast customer support a priority. We provide 24×7 support and will respond to major issues within 2 hours or less.

Security: End-to-End Encryption

Resilio provides state-of-the-art data security that has been reviewed and verified by third-party security experts.

Securely transfer files over end-to-end encrypted connections using:

- Mutual authentication: Data is only delivered to designated endpoints.

- In-transit encryption: All files are encrypted in transit using AES-256, so intercepted data can’t be read.

- Cryptographic data integrity validation: Resilio’s integrity validation process ensures that data remains intact and uncorrupted.

- Forward secrecy: One-time session encryption keys protect sensitive data.

- True privacy: While other solutions keep your files private, they store login, usage, and access info on public servers. Resilio keeps ALL of your data private.

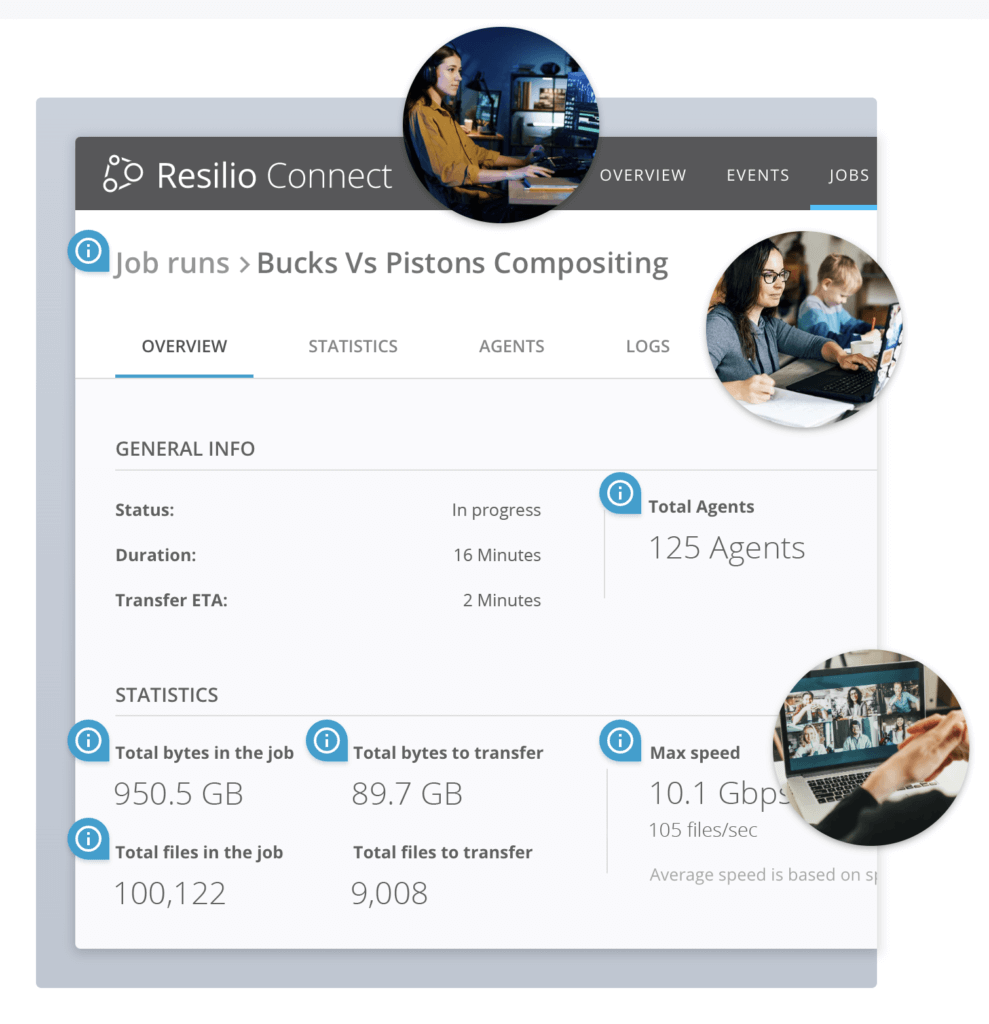

Centrally Managed & Highly Visible

Other tools (especially DFSR) leave you in the dark about the status of your system. But Resilio’s dashboard, real-time notifications, and detailed logs provide you with clear insight into the state of replication in your network.

Resilio also enables you to adapt key parameters like:

- Bandwidth control: You can set bandwidth usage limits based on the time-of-day or day-of-week to make for more efficient enterprise file sharing. And you can create different schedule profiles for different jobs and agent groups.

- Network stack: You can adjust buffer size, packet size, and more to optimize performance.

- Storage stack: You can adjust disk I/O threads, data hashing, and file priorities to meet your needs.

- Functionality: Using our powerful REST API, you can script any Resilio functionality, manage agents, create groups, control jobs, or report on data transfers in real-time.
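To show what a time-of-day and day-of-week bandwidth schedule looks like as data, here is a minimal sketch. It is not Resilio's configuration format or API; the `BandwidthRule` class, its fields, and `limit_for` are hypothetical names used only to illustrate how a schedule profile could be evaluated.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Optional


@dataclass(frozen=True)
class BandwidthRule:
    days: frozenset       # weekdays as returned by datetime.weekday(): 0=Monday .. 6=Sunday
    start: time           # inclusive start of the window
    end: time             # exclusive end of the window
    limit_mbps: Optional[float]  # None means unlimited


def limit_for(rules: list, when: datetime,
              default_mbps: Optional[float] = None) -> Optional[float]:
    """Return the limit of the first rule matching the given moment,
    or the default when no rule applies."""
    for rule in rules:
        if when.weekday() in rule.days and rule.start <= when.time() < rule.end:
            return rule.limit_mbps
    return default_mbps


# Example profile: cap replication at 100 Mbps during weekday business hours.
OFFICE_HOURS = [BandwidthRule(frozenset(range(5)), time(9), time(18), 100.0)]
```

A separate list of rules per job or agent group would correspond to the "different schedule profiles" mentioned above.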

Resilio’s configurability empowers you to control costs and resource usage, optimize performance, and spot/resolve any issues.

If you want to see if Resilio Platform could be a fit for you, schedule a demo or learn more here.

Other Server Replication Software

PeerGFS

PeerGFS provides real-time data replication to improve productivity for enterprise organizations. It can be easily deployed in multi-site, multi-platform, and hybrid multi-cloud environments.

Key Features of PeerGFS

- Speed: Only replicates changed file data.

- Visibility: Provides a configurable management center for monitoring the status of replication in your enterprise.

- Security: Uses Malicious Event Detection to monitor file activity for suspicious behaviors and patterns.

SureSync

SureSync provides real-time file replication and high data availability between Windows servers on a LAN or across a WAN. It uses a multi-threaded copy engine to enhance performance and transmits data over TCP/IP.

Key Features of SureSync

- Speed: Uses remote differential compression to only copy the changed portions of a file.

- Visibility: Enterprise Status panel provides a bird’s eye view of all replication and sync tasks.

- Security: Detailed security mechanism allows you to control which users have access to certain SureSync jobs.

- Reliability: Maintains data integrity by retrying operations at the block, file, and job level in the event of a network or file system error.

GoodSync

GoodSync provides free, real-time data backup and synchronization for Windows and Linux servers, NAS, and mobile devices.

Key Features of GoodSync

- Speed: Runs syncs in parallel threads.

- Visibility: Provides logs of all file changes and a change report for tracking and analysis.

- Security: Uses AES-256 to encrypt data at rest and in transit.

- Reliability: Uses a sophisticated algorithm and bit-by-bit synchronization to ensure accuracy and protect against data loss.

DFSR

DFSR (Distributed File System Replication) is a role service for Windows servers that enables organizations to replicate folders across multiple servers and sites. While it’s free, DFSR suffers from reliability, visibility, and performance issues.

Key Features of DFSR

- Speed: Uses remote differential compression to replicate only changed file blocks.

- Reliability: DFSR transfer is often unreliable. It may report that all files were successfully transferred to their destination when, in fact, they were not.

- Security: Uses authenticated and encrypted remote procedures when replicating data.

- Visibility: As we stated earlier, DFSR’s lack of visibility and centralized management drive many users to seek other solutions.

Rsync

Rsync is a free command-line software utility for Linux and Unix systems that can be used to replicate data between local and remote servers. While it provides reliable transfer, it suffers from certain limitations (such as not copying open files).

Key Features of Rsync

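- Speed: Uses a delta-transfer algorithm (its remote-update protocol) to send only the differences between source and destination files, which speeds up file transfer.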

- Visibility: The “--progress” option can be used to see the status of transfers currently in progress. However, as a command-line software utility, it doesn’t provide a user-friendly interface.

- Security: Rsync does not encrypt data itself. Transfers are protected in transit only when they run over a secure transport such as SSH.

- Reliability: Performs end-to-end checks to ensure all files arrive at their destination.
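Rsync's delta transfer relies on a weak "rolling" checksum that can be updated in constant time as a window slides one byte along a file, which lets it find matching blocks at any offset. The sketch below follows the formula from the rsync technical report in simplified form. Real rsync pairs this weak checksum with a strong hash and includes details omitted here, and the function names are illustrative.

```python
M = 1 << 16  # both checksum halves are kept modulo 2**16


def weak_checksum(block: bytes) -> tuple[int, int]:
    """Compute the two halves (a, b) of the rolling checksum from scratch."""
    n = len(block)
    a = sum(block) % M
    # Each byte is weighted by its distance from the end of the block.
    b = sum((n - i) * x for i, x in enumerate(block)) % M
    return a, b


def roll(a: int, b: int, out_byte: int, in_byte: int, block_len: int) -> tuple[int, int]:
    """Slide the window one byte: drop out_byte from the front and
    append in_byte at the end, without rescanning the block."""
    a_new = (a - out_byte + in_byte) % M
    b_new = (b - block_len * out_byte + a_new) % M
    return a_new, b_new


def combined(a: int, b: int) -> int:
    """Pack both halves into a single 32-bit value."""
    return a + (b << 16)
```

Because each slide costs a few arithmetic operations instead of a full rescan, the receiver's block checksums can be compared against every offset of the sender's file cheaply. Only candidate matches then need the more expensive strong hash.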

Robocopy

Robust File Copy, or Robocopy, is a command-line software utility from Microsoft that can be used to replicate large data on Windows servers.

Key Features of Robocopy

- Speed: Multithreaded copying for faster file transfer.

- Visibility: Monitor current transfers with command-line progress indicator. However, as a command-line software utility, it doesn’t provide a user-friendly interface.

- Security: No native encryption provided, leaving your data vulnerable in transit.

- Reliability: When a mid-transfer network interruption occurs, it marks the progress of file transfer so it can pick up where it left off.
