S3 Replication is a useful feature for companies looking to replicate objects and their metadata across their Amazon Web Services (AWS) infrastructure.

But while S3 Replication is relatively simple to set up, it’s often difficult to calculate how much replication will cost in advance. Because of AWS’ sheer complexity, many variables impact the price of replication, including:

- The number of replication PUT requests you’re making.

- The storage class you choose for the destination bucket.

- The regions where the destination and source buckets are located when you’re doing cross-region replication (CRR), and more.

In this guide, we explain how to calculate your S3 Replication costs based on the five key factors that make up the majority of replication charges.

But before we dive in, it’s worth noting that S3 Replication has downsides beyond the difficulty of estimating its cost. Specifically, it can be slow, unreliable, time-consuming to debug, and expensive, especially when moving data across AWS regions.

That’s why, in the second part of this guide, we’ll show how to overcome these S3 Replication issues with Resilio Connect — our real-time sync and replication solution that can help you replicate data across any AWS region or service.

Specifically, you’ll learn how you can use Resilio Platform to:

- Achieve faster and more reliable replication on AWS.

- Easily manage the entire replication process from a single place.

- Reduce unnecessary data movements and minimize replication costs.

- Avoid vendor lock-in by replicating data across multiple AWS regions, services, cloud providers, and even on-prem storage.

For more details on how Resilio Platform can help your organization, schedule a demo with our team.

5 Factors to Consider When Calculating S3 Replication Costs

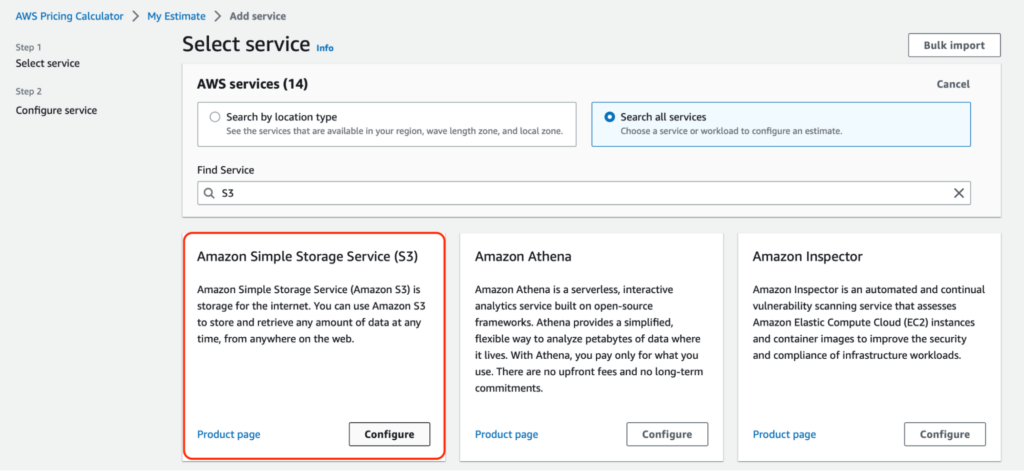

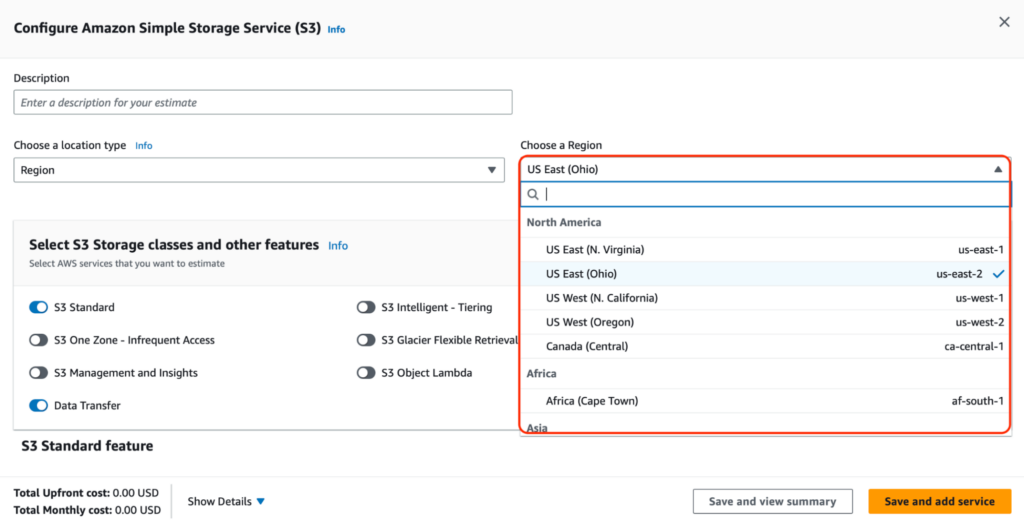

Note that you can use the AWS pricing calculator while reading this guide to start estimating your specific S3 replication costs. Just open the calculator, find Amazon Simple Storage Service (S3), and click on “Configure”.

From here, you can input all relevant variables, like storage classes, the number of PUT requests, and the regions where data transfers will take place.

1. S3 Storage Costs

AWS charges you for storing objects in S3 buckets. This means you owe standard storage costs for the replicated S3 objects in the destination bucket.

These storage charges vary widely depending on each bucket’s region and the Amazon S3 storage class you’ve chosen. For example, here’s the S3 pricing for the most popular classes in the US West Region (Northern California):

- S3 Standard Storage, which is a general-purpose object storage solution suitable for frequently accessed data. Storing up to 50 TB of data per month in this class costs $0.026 per GB at the time of writing.

- S3 Standard: Infrequent Access Storage, which is a great data storage solution for objects that won’t be accessed frequently but have to be retrieved in milliseconds. This class costs $0.0144 per GB.

- S3 One Zone: Infrequent Access Storage, which is similar to the previous class but more suitable for re-creatable infrequently accessed data. With this option, your data is only stored in one Availability Zone, which is why the price is lower compared to the previous one at $0.0115 per GB.

- S3 Intelligent Tiering, which automatically moves your objects between different tiers based on your access patterns. With this class, you pay monitoring and automation charges ($0.0025 per 1,000 objects in US West) and different storage charges depending on the tier your S3 data is stored in. For example, if you have 100 TB in the Frequent Access Tier, you’ll be charged $0.025 per GB.

- S3 Glacier Instant Retrieval, which is a low-cost storage class that provides millisecond data retrieval for objects accessed once per quarter. It costs $0.005 per GB.

- S3 Glacier Deep Archive, which is a low-cost archive storage solution for objects accessed once or twice per year with retrieval in hours. It’s by far the cheapest option at $0.002 per GB.

- S3 Glacier Flexible Retrieval, which combines aspects of the previous two classes by being a long-term, low-cost storage option with retrieval options from minutes to hours. It costs $0.0045 per GB.

Again, these prices vary by region, so make sure to choose the region of your destination bucket when calculating storage costs.
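As a rough illustration, the per-GB prices listed above can be turned into a monthly estimate for the destination bucket. This is a minimal sketch, not an official AWS formula: the dictionary keys and the `monthly_storage_cost` helper are hypothetical names, the prices are the US West (Northern California) figures quoted in this guide, and the calculation ignores volume tiers above 50 TB, minimum storage durations, and Intelligent Tiering monitoring fees.

```python
# Per-GB monthly prices quoted above for US West (Northern California).
# Prices change over time and by region, so treat these as placeholders.
PRICE_PER_GB_MONTH = {
    "STANDARD": 0.026,
    "STANDARD_IA": 0.0144,
    "ONEZONE_IA": 0.0115,
    "GLACIER_IR": 0.005,
    "GLACIER_FLEXIBLE": 0.0045,
    "DEEP_ARCHIVE": 0.002,
}


def monthly_storage_cost(size_gb: float, storage_class: str) -> float:
    """Flat-rate estimate: replicated size in GB times the per-GB price."""
    return size_gb * PRICE_PER_GB_MONTH[storage_class]


# Compare what 10,000 GB of replicated objects would cost in each class.
for storage_class in PRICE_PER_GB_MONTH:
    cost = monthly_storage_cost(10_000, storage_class)
    print(f"{storage_class:<17} ${cost:,.2f} per month")
```

Running the comparison for your own replicated volume makes it easier to see how much the destination storage class alone changes the bill.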

You can get a more in-depth storage class analysis on this AWS S3 product page.

2. Replication PUT Requests

Besides storage charges, S3 also requires you to pay for requests made against your buckets and objects.

When it comes to replication, you need to consider your replication PUT requests. The costs for these requests differ depending on the region and S3 storage class. For example, 1,000 PUT requests in the US East Region (Ohio) at the time of writing cost:

- $0.005 for the S3 Standard and Intelligent Tiering classes.

- $0.01 for the S3 Standard: Infrequent Access and S3 One Zone: Infrequent Access classes.

- $0.02 for the S3 Glacier Instant Retrieval Class.

- $0.05 for the S3 Glacier Deep Archive Class.

- $0.03 for the S3 Glacier Flexible Retrieval Class.
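To see how these request prices add up, here is a small sketch that estimates the PUT charge for a given number of replicated objects. It assumes one replication PUT request per object, which is a simplification, and the dictionary keys and `replication_put_cost` function are hypothetical names used only for this example. The prices are the US East (Ohio) figures listed above.

```python
# Price per 1,000 PUT requests in US East (Ohio), as listed above.
PUT_PRICE_PER_1000 = {
    "STANDARD": 0.005,
    "INTELLIGENT_TIERING": 0.005,
    "STANDARD_IA": 0.01,
    "ONEZONE_IA": 0.01,
    "GLACIER_IR": 0.02,
    "GLACIER_FLEXIBLE": 0.03,
    "DEEP_ARCHIVE": 0.05,
}


def replication_put_cost(object_count: int, storage_class: str) -> float:
    """Assumes one replication PUT request per replicated object."""
    return object_count / 1000 * PUT_PRICE_PER_1000[storage_class]


# Example: replicating 5 million objects into two different classes.
for storage_class in ("STANDARD", "DEEP_ARCHIVE"):
    cost = replication_put_cost(5_000_000, storage_class)
    print(f"{storage_class}: ${cost:,.2f}")
```

Because the charge scales with object count rather than size, buckets with many small objects can pay noticeably more in requests than their total volume suggests.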

3. Data Transfer Charges

When replicating data across different regions, you pay for the inter-region data transfer out from S3 to each destination region, e.g., from the US East Region to the US West Region.

Note: Transferring data between S3 buckets in the same region (and out to Amazon CloudFront) is free, so you don’t need to worry about this when doing same-region replication (SRR).

For example, moving data out of most US, Canada, and Europe regions into other AWS regions costs between $0.01 and $0.02 per GB. However, prices are much steeper for regions in Asia and the Middle East, ranging from $0.085 to $0.10 per GB and, in some cases, even $0.14.
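The arithmetic for cross-region replication is simple once you know the per-GB rate for your source region. The sketch below assumes a flat rate and a hypothetical helper named `cross_region_transfer_cost`; the $0.02 figure in the example is the upper end of the US, Canada, and Europe range mentioned above, so check the current rate for your own source and destination regions.

```python
def cross_region_transfer_cost(
    size_gb: float, rate_per_gb: float, destination_regions: int = 1
) -> float:
    """Inter-region transfer out is billed once per destination region."""
    return size_gb * rate_per_gb * destination_regions


# Same-region replication (SRR) has no transfer charge between buckets.
srr_cost = 0.0

# Example: 5,000 GB replicated to two other regions at $0.02 per GB.
crr_cost = cross_region_transfer_cost(5_000, 0.02, destination_regions=2)
print(f"SRR transfer: ${srr_cost:,.2f}")
print(f"CRR transfer: ${crr_cost:,.2f}")
```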

Data transfer charges are among the most complex and overlooked aspects of AWS, so we highly recommend checking out our detailed guide to AWS cross-region data transfer costs.

4. Replication Time Control and S3 Replication Metrics

S3 Replication Time Control (S3 RTC) helps companies meet compliance requirements by ensuring low-latency replication, with 99.99% of objects being replicated within 15 minutes.

By default, S3 RTC includes S3 Replication Metrics, which help you monitor the replication process — the total number and size of objects awaiting replication, maximum replication times, and so on.

While useful, both S3 RTC and S3 Replication Metrics come with separate charges:

- S3 RTC incurs a data transfer fee of $0.015 per GB. This price is the same for all AWS regions.

- S3 Replication Metrics are billed at the same rate as Amazon CloudWatch Custom metrics. You can use this CloudWatch pricing calculator to calculate the costs depending on the number of API requests and other factors.
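To show how the S3 RTC fee stacks on top of cross-region transfer, here is a short sketch. The function names are hypothetical, the $0.02 transfer rate is only an example value, and S3 Replication Metrics are left out because their cost depends on CloudWatch pricing that this guide doesn’t list.

```python
RTC_FEE_PER_GB = 0.015  # S3 RTC data transfer fee quoted above


def rtc_cost(size_gb: float) -> float:
    """S3 RTC fee on its own."""
    return size_gb * RTC_FEE_PER_GB


def crr_with_rtc_cost(size_gb: float, transfer_rate_per_gb: float) -> float:
    """Inter-region transfer plus the S3 RTC fee for the same data."""
    return size_gb * (transfer_rate_per_gb + RTC_FEE_PER_GB)


# Example: 5,000 GB replicated cross-region at an assumed $0.02 per GB.
print(f"RTC only:       ${rtc_cost(5_000):,.2f}")
print(f"Transfer + RTC: ${crr_with_rtc_cost(5_000, 0.02):,.2f}")
```

At that example rate, enabling S3 RTC adds 75% on top of the transfer charge, which is worth weighing against how strict your replication deadlines really are.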

5. S3 Batch Replication Charges

If you’re using S3 Batch Replication to replicate existing objects or ones that previously failed to replicate, you’ll need to pay separate fees for the Batch Replication jobs.

Again, these charges differ depending on the region and number of objects you’re replicating. For most regions, you’ll have to pay:

- $0.25 per replication job.

- $1 per million objects processed.
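As a quick worked example, the two Batch Replication fees above combine as follows. This is a sketch with a hypothetical function name, using the prices quoted for most regions. It covers only the Batch Replication fees themselves, not the storage, request, and transfer charges described in the earlier sections.

```python
JOB_FEE = 0.25                 # per Batch Replication job
FEE_PER_MILLION_OBJECTS = 1.0  # per million objects processed


def batch_replication_cost(jobs: int, objects: int) -> float:
    """Job fee plus the per-object processing fee."""
    return jobs * JOB_FEE + objects / 1_000_000 * FEE_PER_MILLION_OBJECTS


# Example: one job that backfills 25 million existing objects.
print(f"${batch_replication_cost(1, 25_000_000):,.2f}")
```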

How to Overcome S3 Replication’s Central Problems with Resilio Connect

As you can see, calculating S3 replication costs can be complex due to the number of variables you need to take into account. This often results in companies getting AWS bills that far exceed their estimations.

Additionally, S3 Replication often fails (or takes too long) due to bucket versioning, permission issues, and lots of other technical misconfigurations. When that happens, the complexity of AWS makes debugging very difficult, which can be a big issue for companies that rely on their data always being up-to-date in locations all over the world.

Fortunately, Resilio Connect can help you replicate data across AWS regions and services while overcoming these issues. Specifically, Resilio Platform can:

- Minimize unnecessary data movement and egress traffic (to minimize costs).

- Replicate all objects reliably, preserving data integrity end-to-end.

- Overcome latency and packet loss across all cloud regions, irrespective of distance.

- Overcome latency and packet loss between the user’s remote systems located anywhere and the S3 storage instance.

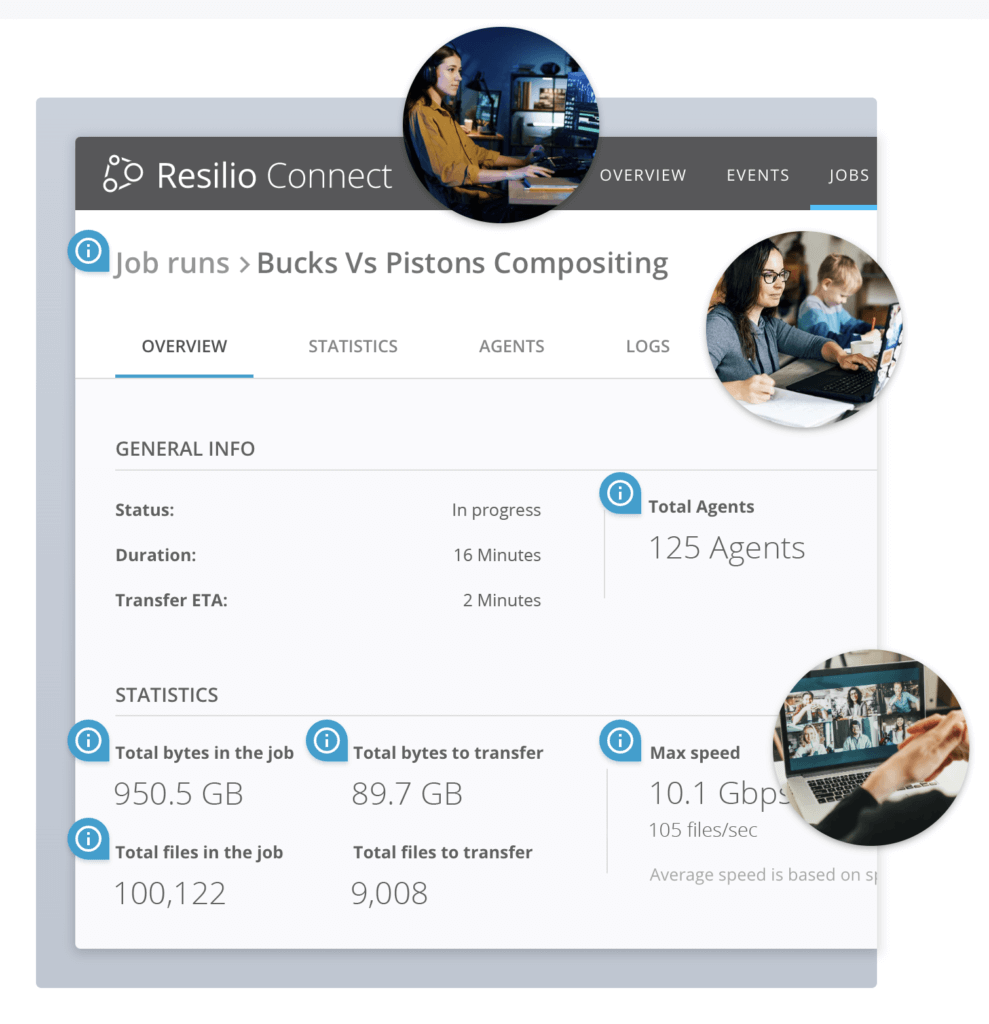

- Give you clear visibility and granular control over the replication process.

- Ensure you get near-instant replication speeds every time.

- Provide you with full replication flexibility in terms of regions, services, cloud providers, and more.

Transparent Selective Sync & Smart Cloud Routing: No More Unnecessary Data Movement and Egress Costs

As we said earlier, data transfer fees are a big contributor to most S3 replication bills. The more data you move across regions, the more you have to pay, so it’s essential to limit unnecessary data movements.

To help you do that, Resilio Platform gives you full control over how you move data to the cloud, within the cloud — within and across cloud regions — and from the cloud to reduce egress traffic.

For example, you can use our Transparent Selective Sync (TSS) feature to drive replication on demand. TSS lets you initiate replication of only the objects you’ve selected, which reduces the amount of data being replicated and, as a result, lowers your costs.

Our Smart Routing capability also plays a big role in cost optimization. It lets you granularly route (or pin) traffic to a given network. This means you can choose to keep all your traffic on the AWS network or replicate some objects to a remote edge network or office and keep traffic on a LAN (Local Area Network), instead of on a more expensive Wide Area Network (WAN).

Lastly, thanks to Resilio’s flexibility, you can store frequently accessed objects locally. These files don’t have to be continuously downloaded from the AWS cloud, which further reduces your costs.

Central Management Console: High Visibility and Granular Replication Management

While AWS offers tons of storage and replication tools, its complexity makes it difficult to replicate data in an efficient and cost-effective way.

You can choose between several replication options besides S3 Replication, like AWS DataSync and the S3 Copy Objects API. You also have to consider the factors we went over, as well as other technical minutiae, to ensure you’re not moving more data across regions than necessary.

And, when something goes wrong, you usually have to spend hours debugging by analyzing permissions, bucket ownership, Identity and Access Management (IAM) roles, and much more.

In contrast, Resilio Platform makes things simple by letting you control all replication jobs — regardless of the region, service, or even cloud provider — from a single Central Management Console.

You can use the console to adjust key replication aspects, including:

- Bandwidth by setting usage limits based on the time of day or day of the week. You can also create different schedules for different replication jobs and agent groups.

- Network by changing buffer size, packet size, and more to optimize performance.

- Storage stack by tailoring your data hashing, disk I/O threads, and file priorities to meet your specific needs.

- Functionality by using our REST API to control jobs, create groups, manage agents, report on data transfers, set up notifications, or script any other Resilio functionality.

In short, Resilio Connect’s simplicity drastically reduces the time organizations spend on setting up, managing, and debugging replication.

For example, Mixhits Radio is one of the companies that experienced massive time-savings thanks to the ability to manage everything from a single place:

“We have gone from spending 15 hours on average per week troubleshooting conflicts in the prior solution to spending no time at all with Resilio. We configure jobs once in the Resilio Platform Management Console and never have to look at it again.” — Gary Hanna, CEO, Mixhits Radio

For more details on their use case and approach, check out the full Mixhits Radio case study.

P2P Architecture & WAN Optimization: Fast, Efficient, and Resilient Replication

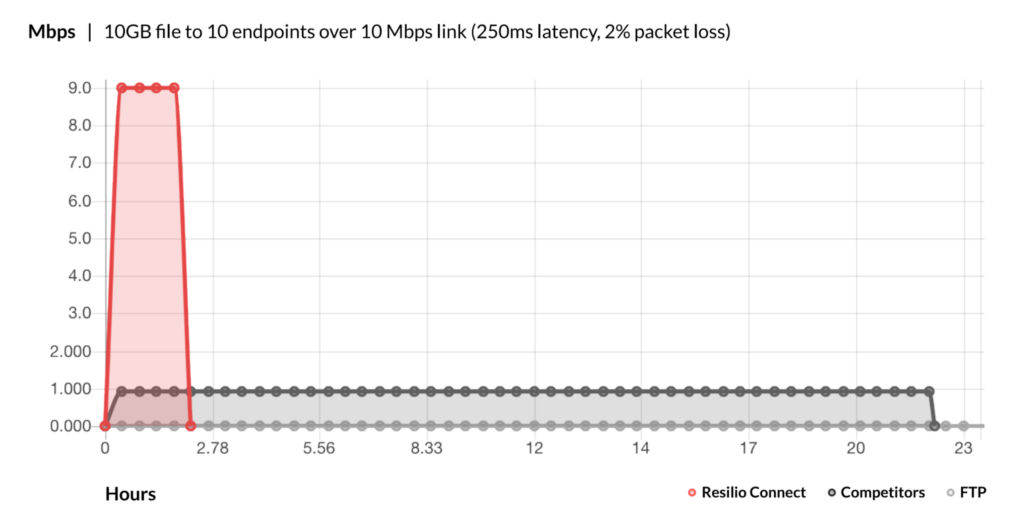

The underlying reasons for Resilio Connect’s superior performance are its unique P2P (peer-to-peer) transfer architecture and proprietary WAN (wide area network) optimization technology.

First, the P2P architecture makes use of every device in your network to replicate objects. This is in stark contrast to the two typical topologies used by most replication solutions (including those offered by AWS):

- Client-server replication, where one device acts as a hub that can receive objects from any other device. Conversely, the clients can only share data with the hub but not with each other, e.g., if Client 1 wants to replicate objects to the other servers, it must first send them to the hub that does the actual replication.

- “Follow-the-sun” replication, where replication only occurs from one device to the next, e.g., Device 1 must first replicate objects across Device 2, which must then replicate them to Device 3, and so on.

Both topologies create replication bottlenecks by limiting the process to only two devices at a time. They also have a single point of failure, so one device can easily slow down or stop the replication altogether.

Resilio’s P2P architecture avoids that by allowing all devices to share objects with each other.

Resilio Platform also uses file chunking to turn files into several chunks, which can be transferred independently from each other. This results in transfer speeds 3-10x faster than traditional replication solutions.
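To make the idea of chunking concrete, here is a generic sketch of splitting data into independently transferable pieces and validating them on arrival. It is not Resilio’s implementation: the `Chunk` type, the function names, the fixed chunk size, and the use of SHA-256 are all assumptions chosen for illustration.

```python
import hashlib
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    index: int    # position of the chunk in the original payload
    data: bytes
    sha256: str   # hash computed by the sender


def split_into_chunks(payload: bytes, chunk_size: int) -> list[Chunk]:
    """Cut the payload into fixed-size pieces, each with its own hash."""
    chunks = []
    for index, offset in enumerate(range(0, len(payload), chunk_size)):
        piece = payload[offset:offset + chunk_size]
        chunks.append(Chunk(index, piece, hashlib.sha256(piece).hexdigest()))
    return chunks


def reassemble(chunks: list[Chunk]) -> bytes:
    """Verify every chunk, then join them in their original order."""
    for chunk in chunks:
        if hashlib.sha256(chunk.data).hexdigest() != chunk.sha256:
            raise ValueError(f"chunk {chunk.index} failed validation")
    ordered = sorted(chunks, key=lambda c: c.index)
    return b"".join(c.data for c in ordered)


payload = bytes(range(256)) * 64           # 16 KiB of sample data
chunks = split_into_chunks(payload, 4096)
random.shuffle(chunks)                     # simulate out-of-order arrival
print(reassemble(chunks) == payload)
```

Because each piece carries its own index and hash, pieces can arrive from different peers in any order and still be checked and reassembled correctly.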

The P2P architecture and ability to turn files into several chunks make Resilio Platform one of the few solutions that can perform true bidirectional sync, including:

- One-to-one transfer.

- One-to-many transfer.

- Many-to-one transfer.

- N-way transfer.

Second, Resilio’s Zero Gravity Transport™ (ZGT), our proprietary WAN optimization technology, helps you make the most out of expensive WAN networks.

WAN transfer and replication is an area where the majority of replication tools, including AWS’ solutions, struggle.

Most replication tools use the TCP/IP transfer protocol, which is great for local area networks (LANs) but not suitable for WAN transfers. TCP/IP treats packet loss — one of the defining characteristics of WANs — as a network congestion issue and lowers the transfer speeds as a response. However, these slowdowns are unnecessary, because packet loss isn’t a network congestion problem in WAN settings.
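To put rough numbers on this slowdown, the sketch below uses the well-known Mathis approximation for loss-based TCP congestion control, where throughput per connection is bounded by roughly (MSS / RTT) × (C / √p) with C ≈ √1.5. This model is not part of the original discussion and is only an approximation; modern congestion control algorithms can behave differently. The function name and the sample values (1,460-byte segments, 100 ms RTT) are assumptions.

```python
import math


def loss_limited_throughput_bps(
    mss_bytes: int, rtt_s: float, loss_rate: float
) -> float:
    """Mathis approximation: (MSS / RTT) * (C / sqrt(p)), with C = sqrt(1.5)."""
    return (mss_bytes * 8 / rtt_s) * (math.sqrt(1.5) / math.sqrt(loss_rate))


# 1,460-byte segments over a 100 ms round trip at three loss rates.
for loss in (0.0001, 0.001, 0.01):
    mbps = loss_limited_throughput_bps(1460, 0.100, loss) / 1e6
    print(f"loss {loss:.2%}: about {mbps:.1f} Mbps per connection")
```

Under this model, throughput falls with the square root of the loss rate and in direct proportion to RTT, regardless of how much bandwidth the link actually has.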

As a result, companies using these tools experience big replication delays. They also don’t make the most out of expensive WAN networks and struggle to synchronize their data across multiple sites and cloud providers.

Conversely, our ZGT protocol minimizes the impact of packet loss and high latency and speeds up transfers across all networks by:

- Utilizing a congestion control algorithm to calculate the ideal send rate and periodically probing the RTT (Round Trip Time) to inform the algorithm and maintain an optimal sending rate.

- Using interval acknowledgment for a group of packets with additional information about lost packets.

- Sending packets periodically with a fixed packet delay to create a uniform packet distribution time.

- Retransmitting lost packets once per RTT to decrease unnecessary transmissions.

We have a dedicated WAN optimization whitepaper where you can learn more about our approach and the benefits of the ZGT protocol.

No Single Point of Failure: Reliability and Fault Tolerance

Resilio’s P2P architecture doesn’t have a single point of failure.

If one server fails, our software’s agents can always access data from other ones, which removes the reliability risks associated with the client-server and follow-the-sun topologies.

Resilio Platform can also:

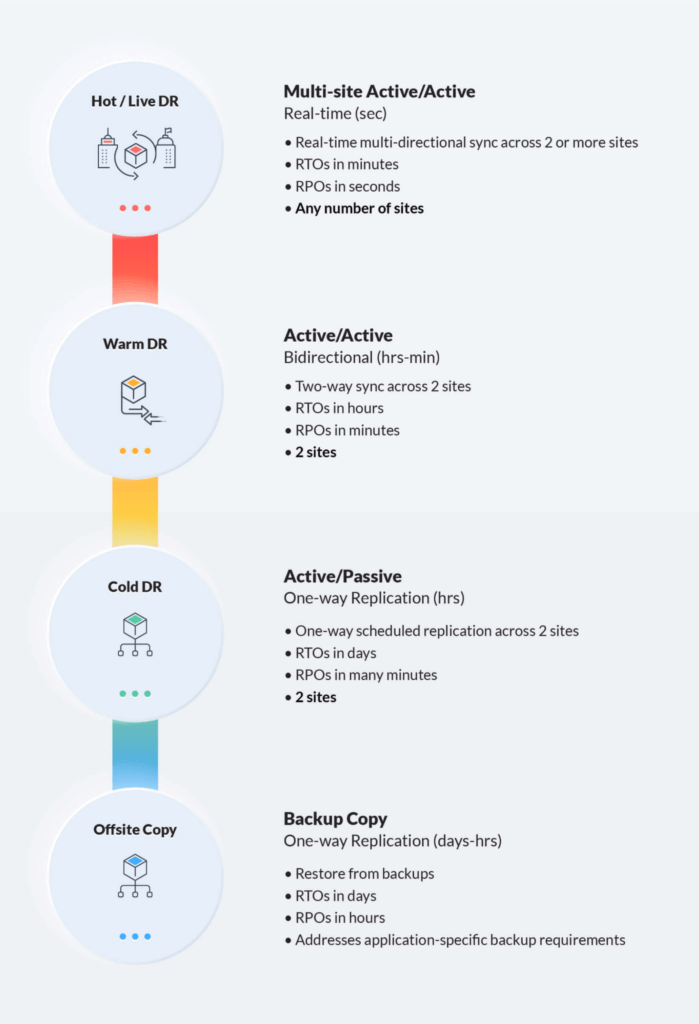

- Meet sub-five-second RPOs (Recovery Point Objectives) and RTOs (Recovery Time Objectives) within minutes of an outage by utilizing any network in your environment.

- Perform “checksum restarts” in the event of a network failure, so failed transfers continue where they left off.

- Use cryptographic file validation to guarantee that replicated files remain uncorrupted.

These capabilities, along with the P2P architecture, make Resilio an ideal solution for disaster recovery (DR) scenarios, including:

- Cold DR.

- Hot-site DR.

- Warm-site DR.

- Offsite copy.

For example, one of our clients develops software that’s used by 94% of Fortune 100 companies.

Before Resilio, they were using the Microsoft DFS replication service, and relying on backup methods like NDMP to manage and move files globally. This created lots of delays for their customers all over the world and resulted in additional challenges to their backup and restore strategy.

Thanks to Resilio, the company now has a single point of backup, which gives them granular control at the file level and massively reduces their recovery times.

“Our file recovery times were substantially decreased with Resilio. Our recovery time took 3 or 4 days using DFSR and we could not meet our RTOs. Using Resilio Connect, we can restore a single file — in some cases in less than a minute — using the exact same IT infrastructure (servers, storage, and DFS namespace).”

You can check out the complete case study for more details on their challenges and Resilio’s value to the company.

Cloud-Agnostic Software: Versatility and Ease of Setup

As AWS solutions, S3 and S3 Replication are built to keep users within the AWS ecosystem. In contrast, Resilio Platform is cloud-agnostic software that builds on open formats, open standards, and an open, multi-cloud architecture.

This makes Resilio incredibly flexible in terms of setup, as you can deploy it on your existing infrastructure, including:

- On-prem, in the cloud, and in hybrid cloud scenarios.

- On industry-standard desktops, servers, storage, and networks.

- Cross-platform on Linux, Ubuntu, OS X, Android, iOS, and Microsoft Windows servers.

- VMware, Citrix, and other virtualization platforms.

The entire deployment process is very fast, allowing companies to set up our software and begin replicating in as little as two hours. Plus, there’s no need to purchase any new hardware. You can just use Resilio Platform with the storage your team already uses, like DAS, NAS, or SAN.

This flexibility also lets you avoid vendor lock-in and replicate data across any global region, cloud service, on-prem storage, and cloud provider (including AWS, Azure, GCP, Wasabi, Backblaze, and any other S3-compatible cloud storage).

Put simply, Resilio gives you the ability to pivot at a moment’s notice by tearing down projects in one cloud, starting them in another, and optimizing your cost structure as needed.

AES 256 Encryption: Enterprise-Grade Security

Resilio Platform uses a variety of features to ensure your data is always safe. These features, which are all reviewed by 3rd-party security experts, include:

- AES 256 encryption for data at rest and in transit.

- One-time session encryption keys for protecting sensitive data.

- Mutual authentication keys, which ensure your data only gets delivered to its designated endpoints.

- Cryptographic data validation, which guarantees data arrives at its destination uncorrupted.

Get Fast and Reliable Replication That Just Works with Resilio Connect

Resilio Platform is the ideal solution for organizations that need fast, reliable, and secure object replication across AWS regions (and other cloud providers). Its P2P transfer architecture and WAN optimization technology result in:

- Fast, real-time replication: Resilio has the fastest transfer speeds in the industry — 10+ Gbps per endpoint, which is up to 10 times faster than traditional solutions.

- Optimized WAN transfers: Resilio can transfer data over high-latency, unreliable internet connections and make the most out of expensive WANs.

- Resilience and reliability: Resilio’s P2P architecture means that there is no single point of failure, making it ideal for disaster recovery scenarios.

- Flexibility: You can set up Resilio on your existing infrastructure and use it with various AWS services, other cloud providers, and on-prem environments.

To learn more about how Resilio can help you replicate data quickly and reliably, schedule a demo with our team.