IoT (Internet of Things) data ingestion is the first step of a complete IoT data pipeline. However, most IoT ingest solutions can only ingest small data payloads. AWS IoT Core, for example, limits the payload of each MQTT publish request to 128 KB, and it rejects publish and connect requests larger than this.

But what happens when you need to ingest larger payloads containing semi-structured or unstructured data sets, such as large files? Use cases that may require this include uploading medical images to the cloud or moving binary files generated by industrial machines to the cloud.

Ingestion is crucial as it enables companies to:

- Optimize IoT device operations, downtime, and key performance metrics.

- Connect their IoT applications to other services, such as monitoring solutions.

- Collect data that they can aggregate and process to improve products, services, and operations.

While workarounds exist for ingesting large payloads (such as using Amazon S3 together with AWS IoT Core), they can be challenging to set up and manage. A much simpler option is a solution explicitly designed to ingest large IoT payloads: Resilio Connect.

Want to see how Resilio can quickly and securely ingest your large IoT payloads? Schedule a demo with our team.

In this article, we’ll explore six key challenges organizations struggle with when ingesting large payloads of IoT data. We’ll describe how our edge ingest solution, built on Resilio Connect, resolves each challenge, providing the most reliable and efficient solution for ingesting large and unstructured IoT datasets.

Resilio Platform is an agent-based file ingest solution that uses a distributed peer-to-peer (P2P) replication architecture and proprietary WAN acceleration protocol to easily ingest, distribute, and sync data (with no limit on file sizes) across thousands of endpoints.

Resilio can handle the challenges of large IoT data ingestion thanks to its:

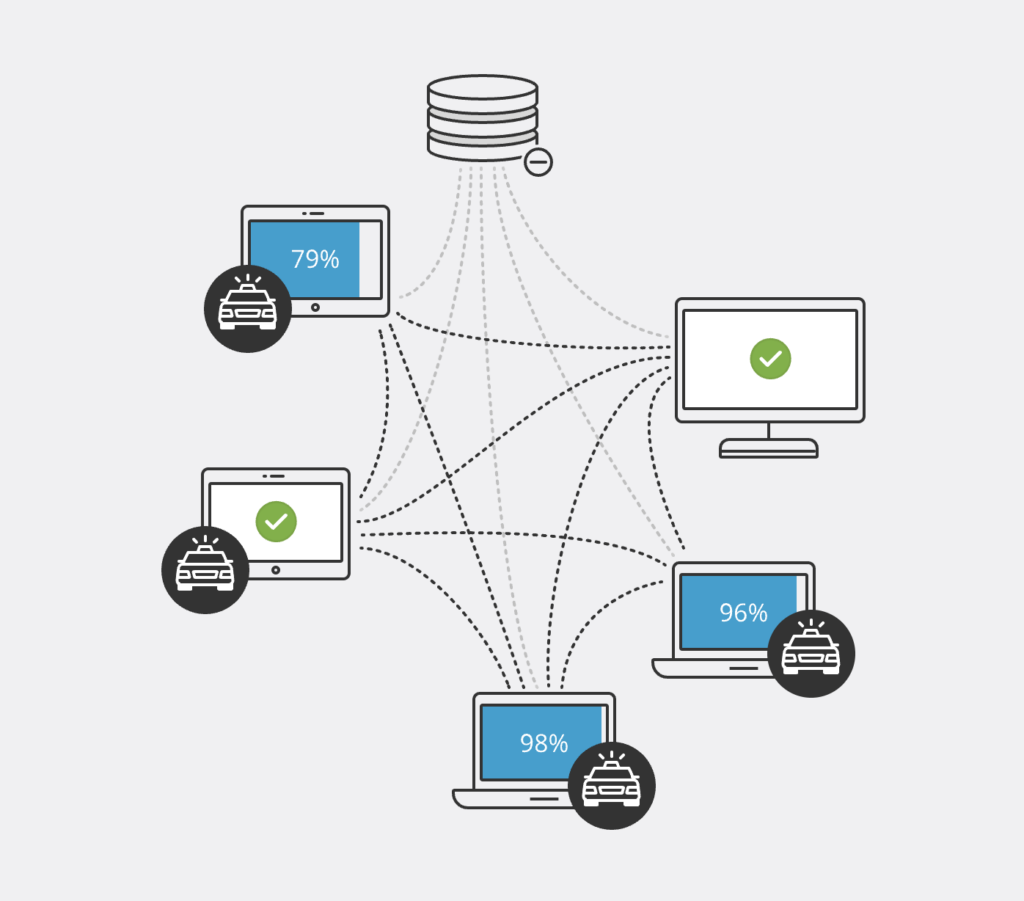

- P2P replication architecture: Resilio uses a P2P replication architecture that converts your infrastructure into a distributed mesh network. It enables all endpoints to share data directly with each other simultaneously — allowing you to leverage every endpoint to enhance scalability, ingest files of any size/type/number, reduce the load on your network and endpoints, improve availability and reliability, and more.

- WAN optimization: Resilio uses a proprietary WAN acceleration protocol, Zero Gravity Transport™ (ZGT), that makes the most of low-bandwidth and intermittent connections and optimizes transfers over any network. ZGT enables organizations located anywhere, including at sea and in countries with underdeveloped network infrastructure, to sync data reliably and in predictable time frames.

- Reliability and fault-tolerance: Resilio includes features that ensure bulletproof data replication in all situations, such as checkpoint restarts, cryptographic integrity validation, and more. In any situation, you can feel confident that your data will always reach its destination.

- Centralized management and automation: Resilio provides granular control over your entire replication environment from one centralized location. And you can automate replication jobs and troubleshooting processes to minimize human intervention.

- Flexibility: You can use Resilio to ingest, distribute, and sync data with IoT devices and any other type of device. It runs on just about any operating system and cloud storage service. You can easily install Resilio on your existing infrastructure and begin replicating data in as little as 2 hours. It can be easily integrated into IoT applications and workflows through scripting automation and a comprehensive API.

- Security: Resilio includes native security features that protect your data at rest and in transit. These security features address the specific challenges of secure IoT data ingestion, preventing hackers from eavesdropping or accessing your cloud. And its new proxy server feature gives IT teams more control over networks, security, and traffic.

Organizations such as McDonald’s, Shifo, Lindblad Expeditions, VoiceBase, Mercedes-Benz, and more rely on Resilio for reliable, automated, and scalable data replication. To learn more about how Resilio can enhance your IoT data ingestion pipeline, schedule a demo with our team.

Challenge 1: Scaling IoT Environments Leads to Load-Balancing and Speed Issues

IoT data ingestion requires ingesting data from many (sometimes millions of) globally distributed devices and applications. Ingestion can occur periodically in batches or through real-time data replication; in either case, large data streams are sent over the network on a constant basis.

Most data ingestion solutions utilize a point-to-point replication architecture configured in a hub-and-spoke model. In this model, all data replication to and from IoT devices must go through a centralized hub server.

This places great strain on your network — which can cause network congestion and replication failures that interrupt business operations — and forces organizations to invest a lot of money into expensive backup servers, scaling infrastructure, and network configurations. Troubleshooting these issues can consume most of your IT department’s time.

And as we stated earlier, most IoT data ingestion solutions can only ingest small payloads and place a limit on the data block size of ingested data — making them poorly suited to large edge and IoT data ingestion.

But Resilio Platform is built on a distributed P2P replication architecture that converts your IoT infrastructure into a distributed mesh network. And it can ingest payloads of any size.

The solution works by installing Resilio agents either directly on each endpoint or on a remote edge caching device used for data collection. Each agent can be isolated or configured to share data with other agents.

In some edge data collection scenarios (many-to-one transfer), each endpoint can operate independently to collect and transfer files back to a central core or cloud storage location. Either way, Resilio gives you the flexibility to rapidly acquire, transfer, and collect files in a variety of ways — based on your ingestion needs.

Resilio’s P2P architecture makes it:

Blazing Fast

Every endpoint in a P2P environment can work together to share files, distributing the network and CPU load across your entire environment and allowing replication to reach blazing-fast speeds. This is accomplished primarily through two Resilio features:

- File chunking

- Horizontal scale-out replication

File chunking is a process where files are broken into multiple chunks that can transfer independently of each other. Each endpoint in your environment can work together to distribute or sync file chunks, enabling Resilio to synchronize your environment 3–10x faster than point-to-point solutions.

For example, imagine you need to sync a large video file across your environment. That file can be broken down into five chunks. Endpoint 1 can send the first chunk to Endpoint 2. Even before it receives the rest of the file, Endpoint 2 can begin sharing the first chunk with another endpoint. As soon as the next endpoint receives the chunk, it can share it immediately as well. Soon, every endpoint will be sharing file chunks concurrently.
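The chunk-level sharing described above can be illustrated with a toy simulation. This is a hypothetical sketch, not Resilio's implementation: the chunk size, the rule that each endpoint sends one missing chunk to one random peer per round, and the function names `split_into_chunks` and `simulate_swarm` are all assumptions made for illustration.

```python
import random


def split_into_chunks(data: bytes, chunk_size: int) -> list[bytes]:
    """Split a byte string into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def simulate_swarm(num_chunks: int, num_endpoints: int, seed: int = 0) -> int:
    """Count rounds until every endpoint holds every chunk.

    Endpoint 0 starts with the whole file. In each round, every endpoint that
    holds at least one chunk picks a random peer and sends it one chunk the
    peer is missing, so endpoints re-share chunks before they have the
    complete file.
    """
    rng = random.Random(seed)
    have = [set(range(num_chunks))] + [set() for _ in range(num_endpoints - 1)]
    rounds = 0
    while any(len(held) < num_chunks for held in have):
        rounds += 1
        transfers = []
        for sender, held in enumerate(have):
            if not held:
                continue
            peer = rng.choice([p for p in range(num_endpoints) if p != sender])
            missing = held - have[peer]
            if missing:
                transfers.append((peer, rng.choice(sorted(missing))))
        # All sends in a round happen concurrently, so apply them together.
        for peer, chunk in transfers:
            have[peer].add(chunk)
    return rounds


video = bytes(range(256)) * 4000            # ~1 MB stand-in for a video file
chunks = split_into_chunks(video, 204_800)
print(len(chunks))                          # 5
print(simulate_swarm(num_chunks=len(chunks), num_endpoints=20))
```

Because every receiver immediately becomes a sender, the number of endpoints uploading grows from round to round instead of being capped at one source.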

Resilio’s P2P architecture allows you to create different types of sync jobs, such as:

- One-way sync: Backup data from one endpoint to another.

- Two-way sync: Keep two endpoints synchronized.

- One-to-many sync: Distribute data and updates across a fleet of vehicles or other edge and IoT devices.

- Many-to-one sync: Consolidate data from a fleet of edge and IoT devices (for example, a consolidation job that ingests footage from CCTV cameras onto a remote server).

- N-way sync: Sync data across hundreds or thousands of endpoints concurrently.
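One way to see how these job types differ is by the direction in which data is allowed to flow between endpoints. The function below is a hypothetical sketch: the job-type strings and the convention that the first endpoint is the hub are illustrative assumptions, not Resilio configuration values.

```python
from itertools import permutations


def sync_edges(job_type: str, endpoints: list[str]) -> set[tuple[str, str]]:
    """Return the (source, destination) pairs along which data may flow.

    Convention (assumed for illustration): endpoints[0] is the source for
    one-way and one-to-many jobs, and the destination for many-to-one jobs.
    """
    first, rest = endpoints[0], endpoints[1:]
    if job_type in ("one-way", "two-way") and len(endpoints) != 2:
        raise ValueError(f"{job_type} sync needs exactly two endpoints")
    if job_type == "one-way":
        return {(first, rest[0])}
    if job_type == "two-way":
        return {(first, rest[0]), (rest[0], first)}
    if job_type == "one-to-many":
        return {(first, r) for r in rest}
    if job_type == "many-to-one":
        return {(r, first) for r in rest}
    if job_type == "n-way":
        # Every endpoint may exchange data with every other endpoint.
        return set(permutations(endpoints, 2))
    raise ValueError(f"unknown job type: {job_type}")


print(sorted(sync_edges("many-to-one", ["server", "cam1", "cam2"])))
# [('cam1', 'server'), ('cam2', 'server')]
```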

P2P replication provides fast, reliable ingestion of data from fleets of vehicles in remote locations with poor network connectivity. This architecture leverages every client in your network, reduces the load on your network, improves availability, and provides high-performance replication.

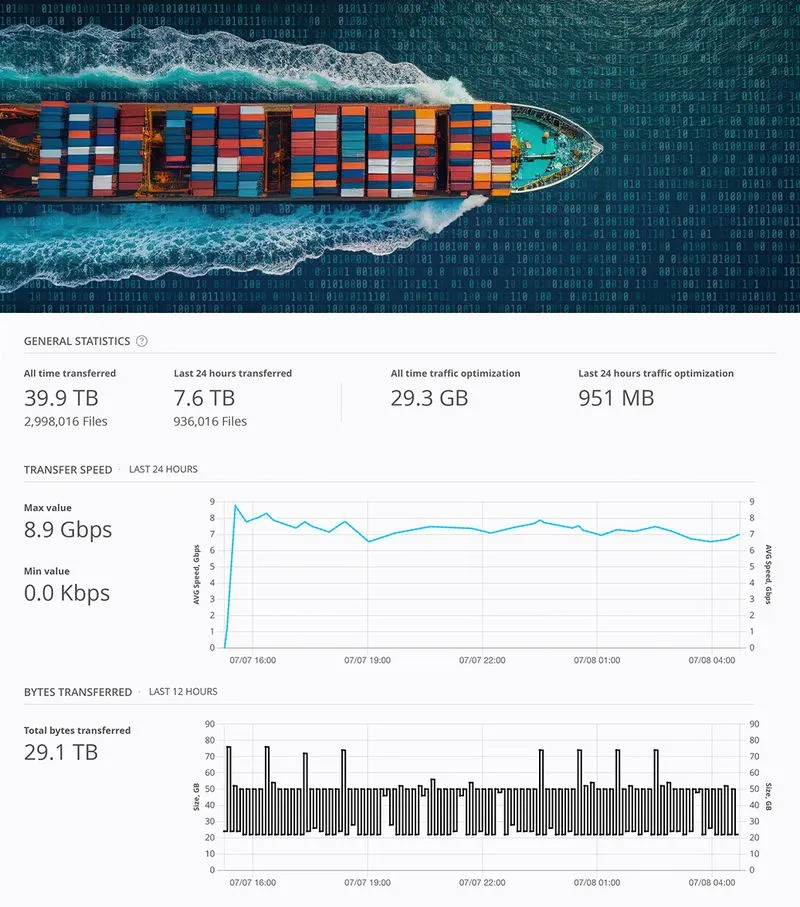

For example, Ross Maritime is a shipping agency that uses Resilio to ingest data from its fleet of sea vessels. Many of its ships spend long periods out of range of high-bandwidth communication channels but are occasionally in range of other ships in the fleet. With Resilio’s P2P replication architecture, they can use vessel-to-vessel communication to quickly relay critical data from ship to ship to shore in a “store and forward” fashion.
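The ship-to-ship-to-shore pattern is a form of store-and-forward relaying. The toy function below is a hypothetical sketch, not Resilio code: it assumes a known schedule of contacts between nodes and a simple rule that any node holding the data passes a copy to every node it meets.

```python
def store_and_forward(contacts, origin, destination):
    """Return the time at which `destination` first holds the data, or None.

    `contacts` is a list of (time, node_a, node_b) tuples meaning the two
    nodes are in range of each other at that time. A node holding a copy
    stores it until its next contact and then forwards it.
    """
    holders = {origin}
    for time, a, b in sorted(contacts):
        if a in holders or b in holders:
            holders.update((a, b))
            if destination in holders:
                return time
    return None


contacts = [
    (7, "ship_b", "shore"),    # ship_b reaches port at t=7
    (3, "ship_a", "ship_b"),   # the two ships pass within radio range at t=3
    (10, "ship_a", "shore"),   # ship_a itself only reaches port at t=10
]
print(store_and_forward(contacts, "ship_a", "shore"))  # 7
```

Relaying through `ship_b` delivers the data at t=7 instead of waiting for `ship_a`'s own port call at t=10.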

Organically Scalable

With traditional point-to-point solutions, every endpoint (IoT device) you add to your system places more strain on the hub server and network, eventually forcing you to invest in more servers and network infrastructure. The costs can quickly add up and managing these large environments is frustrating and time-consuming.

But with Resilio, endpoints share data directly with each other, distributing network and resource consumption across your environment. This enables Resilio to scale organically: each endpoint you add contributes its own bandwidth and resources, so adding endpoints increases replication speed and available capacity rather than straining a central hub.
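The standard textbook model of client-server versus peer-to-peer file distribution makes this scaling argument concrete. It assumes a single file of $F$ bits sent to $N$ endpoints, a source upload rate $u_s$, endpoint upload rates $u_i$, and a slowest download rate $d_{\min}$; it ignores protocol overhead and is a general model, not a measurement of Resilio. The minimum distribution times are bounded by:

$$
D_{\text{hub}} \ge \max\left\{ \frac{N F}{u_s},\ \frac{F}{d_{\min}} \right\}
\qquad
D_{\text{P2P}} \ge \max\left\{ \frac{F}{u_s},\ \frac{F}{d_{\min}},\ \frac{N F}{u_s + \sum_{i=1}^{N} u_i} \right\}
$$

If every endpoint uploads at the same rate $u$, the last P2P term becomes $NF/(u_s + Nu)$, which approaches $F/u$ as $N$ grows, while the hub bound $NF/u_s$ keeps growing linearly with $N$.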

Resilio can easily scale to support environments of any size. It can sync:

- Files of any size: You can reliably acquire and collect images, video, and large text files of any size.

- Any type of file: Industries like energy, construction, media & entertainment, and gaming acquire images, 3D models, photogrammetry, telemetry data, raw data, and other data-intensive file types.

- Large numbers of files: Our engineers successfully synchronized 450+ million files in a single job.

- Environments of any size: You can quickly ingest files from as many endpoints as needed. Files can be obtained directly from endpoints without requiring an intermediate edge caching location. Optionally, Resilio can run on the edge caching locations and transfer and/or sync directly from each location to any other location.

Challenge 2: Operating in Areas with Poor Connectivity

IoT data can be ingested from many different devices, such as home monitoring devices, medical appliances, vehicles, cameras, drones, and other smart devices. Oftentimes, these devices operate in remote locations with poor network connectivity, such as intermittent VSAT links, consumer-grade cellular connections, and unreliable broadband internet.

This creates several IoT data ingestion problems:

- Speed: In time-sensitive situations, slow networks or poor connectivity that cuts in and out can impede an organization’s ability to obtain the data on time.

- Volume: The data being ingested can consist of large files or many small files sent periodically or in real time. Pushing this volume over poor connections can congest the network.

- Congestion: Networks can become congested for many reasons. For example, one of our clients, Northern Marine Group, operates in extremely remote conditions, which led to frequent replication failures and timeouts. New requests and retransmitted data would pile up, delaying data transmission and congesting networks, a problem that spiraled into even more delays and congestion.

Resilio Platform overcomes these network issues by utilizing a proprietary WAN acceleration transfer protocol known as Zero Gravity Transport™ (ZGT). ZGT intelligently analyzes the underlying network conditions, including latency, packet loss, and throughput over time and adjusts to these conditions dynamically in order to maximize bandwidth utilization.

This means that Resilio delivers predictable transfer speeds across any type of connection, regardless of latency and packet loss.

Put simply, ZGT optimizes any type of network connection (such as VSAT, Wi-Fi, 3G/4G/5G cell, and any IP connection) to efficiently replicate data at the fastest speeds possible using:

- Congestion control: ZGT uses a congestion control algorithm that constantly probes the Round Trip Time (RTT) of a network to identify and maintain the ideal data packet send rate.

- Interval acknowledgments: Rather than sending an acknowledgment for each received packet, acknowledgments are sent for groups of packets to minimize traffic over the network.

- Delayed retransmission: Rather than retransmitting lost packets after each acknowledgment, ZGT retransmits lost packets in groups once per RTT to reduce unnecessary retransmissions.
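ZGT's internals are proprietary, so the sketch below does not reproduce them. It is a generic, hypothetical illustration of the three ideas listed above, in the general family of delay-based schemes such as TCP Vegas and LEDBAT: back off when RTT rises above its observed minimum, acknowledge packets in groups, and retransmit losses in one batch per RTT. The class name, thresholds, and step sizes are arbitrary assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class DelayBasedSender:
    """Toy delay-based rate controller with interval ACKs and batched retransmits."""

    rate_pps: float = 100.0          # current send rate, packets per second
    min_rtt: float = float("inf")    # lowest RTT seen so far (estimate of base delay)
    queue_threshold: float = 1.25    # back off when RTT exceeds min_rtt by 25%
    lost: set[int] = field(default_factory=set)  # sequence numbers awaiting resend

    def on_rtt_sample(self, rtt: float) -> None:
        """Adjust the send rate from one RTT probe."""
        self.min_rtt = min(self.min_rtt, rtt)
        if rtt > self.min_rtt * self.queue_threshold:
            # RTT well above its floor: queues are building, so slow down.
            self.rate_pps *= 0.85
        else:
            # RTT near its floor: spare capacity, so probe upward.
            self.rate_pps += 10.0

    def on_interval_ack(self, sent: range, received: set[int]) -> None:
        """Process one ACK covering a whole group of packets."""
        self.lost.update(seq for seq in sent if seq not in received)

    def retransmit_batch(self) -> list[int]:
        """Call once per RTT: resend every known-lost packet together."""
        batch = sorted(self.lost)
        self.lost.clear()
        return batch


sender = DelayBasedSender()
sender.on_rtt_sample(0.100)   # first sample sets the floor; rate rises to 110
sender.on_rtt_sample(0.180)   # RTT inflated by 80%; rate drops to 93.5
sender.on_interval_ack(range(0, 8), received={0, 1, 2, 4, 5, 7})
sender.on_interval_ack(range(8, 12), received={8, 9, 11})
print(round(sender.rate_pps, 2))     # 93.5
print(sender.retransmit_batch())     # [3, 6, 10]
```

Two group ACKs replace twelve per-packet ACKs, and the three losses go out as a single retransmission batch.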

ZGT enables Resilio to replicate data over any network 100x faster than competing solutions. In fact, Resilio engineers successfully transferred a 1 TB payload across Azure regions in 90 seconds.
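For scale, assuming decimal units (1 TB = $10^{12}$ bytes), that benchmark implies an average throughput of roughly:

$$
\frac{10^{12}\ \text{B}}{90\ \text{s}} \approx 1.11 \times 10^{10}\ \text{B/s} = 8 \times 1.11 \times 10^{10}\ \text{bit/s} \approx 89\ \text{Gbit/s}
$$

Matching that result therefore requires network paths and storage on both ends that can sustain close to that rate for the full transfer.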

Because of ZGT’s efficiency, Resilio works well in environments with underdeveloped networks. Our client Shifo Foundation provides healthcare services in low-connectivity areas such as Uganda and uses Resilio Platform to reliably ingest PDF files over 3G/4G and dial-up internet connections.

No matter where you operate or where your IoT devices are located, you can rest easy knowing that their data will be transmitted in a predictable and timely manner.

Challenge 3: Unreliable Replication

IoT data ingestion can be hindered by network issues, device failures, and replication failures/errors. But more often than not, organizations need their data quickly and reliably in order to operate, and such errors can create costly interruptions to business operations.

As we already discussed, point-to-point solutions struggle with the centralized nature of how they replicate data. This issue is compounded when collecting IoT data from areas with poor network quality, as IoT devices are limited to transmitting over just one network.

Resilio’s distributed architecture overcomes this challenge. You can mesh IoT devices in order to distribute the network load and speed up transfers.

And Resilio also has several other features that enable it to reliably replicate data in any scenario:

- Checkpoint restarts: Resilio retries all transfers until they’re complete. And if a transfer is interrupted, Resilio will perform a checkpoint restart to resume the transfer at the point of failure.

- No single point of failure: Resilio’s P2P architecture eliminates single points of failure. If any network or device goes down in your environment, the necessary files or services can be retrieved from any other device.

- Dynamic rerouting: Resilio can route around outages and downed networks to find the optimal path for delivering data. No matter what, your data will always reach its target.

- Data caching and offline access: Resilio enables you to cache any files you want on local storage. If a network goes down, you can still access the data locally and share it over LANs. When the connection resumes, Resilio will automatically sync the data. And Resilio also works for air-gap applications.

- Network prioritization: You can configure Resilio to make use of multiple network connections (such as satellite, Wi-Fi, cell, and more) and prioritize which type of connection you want Resilio to use. And if one network goes down, Resilio can dynamically switch to other available networks to ensure you always stay connected.

- Cryptographic integrity validation: Data can get corrupted during transfers, especially over WANs. Resilio uses cryptographic integrity validation to ensure your files reach their destination intact and uncorrupted.
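Checkpoint restarts and integrity validation work well together: if each chunk is hashed at the source, the receiver can record which chunks arrived intact, resend only what is missing or damaged after an interruption, and reassemble an exact copy. The sketch below is hypothetical. It assumes SHA-256 per-chunk digests and an in-memory checkpoint; the source does not say which hash Resilio uses, and the function names and simulated-failure parameters are illustrative.

```python
import hashlib
from typing import Optional

CHUNK_SIZE = 4  # tiny for demonstration; real systems use far larger chunks


def build_manifest(data: bytes, chunk_size: int = CHUNK_SIZE) -> list[str]:
    """SHA-256 digest of each chunk, computed at the source."""
    return [hashlib.sha256(data[i:i + chunk_size]).hexdigest()
            for i in range(0, len(data), chunk_size)]


def resume_transfer(source: bytes, manifest: list[str], received: dict[int, bytes],
                    fail_after: Optional[int] = None, corrupt: frozenset = frozenset(),
                    chunk_size: int = CHUNK_SIZE) -> bool:
    """Send only chunks not yet in `received` (the checkpoint).

    Returns True once every chunk is present and verified. The simulated link
    drops after `fail_after` chunk sends; chunks whose index is in `corrupt`
    arrive damaged and are rejected by the hash check.
    """
    sent = 0
    for index, expected in enumerate(manifest):
        if index in received:
            continue  # verified in an earlier attempt: skip it
        if fail_after is not None and sent >= fail_after:
            return False  # connection lost; `received` keeps our progress
        chunk = source[index * chunk_size:(index + 1) * chunk_size]
        if index in corrupt:
            chunk = bytes([chunk[0] ^ 0xFF]) + chunk[1:]  # simulate damage in transit
        sent += 1
        if hashlib.sha256(chunk).hexdigest() == expected:
            received[index] = chunk  # only verified chunks enter the checkpoint
    return len(received) == len(manifest)


data = b"sensor-log-0001-0002-0003"
manifest = build_manifest(data)
checkpoint: dict[int, bytes] = {}
first = resume_transfer(data, manifest, checkpoint, fail_after=3)  # link drops
second = resume_transfer(data, manifest, checkpoint, corrupt={5})  # chunk 5 damaged
third = resume_transfer(data, manifest, checkpoint)                # resends chunk 5 only
assembled = b"".join(checkpoint[i] for i in range(len(manifest)))
print(first, second, third)   # False False True
print(assembled == data)      # True
```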

Challenge 4: Managing Large Replication Environments

In a typical IoT data ingestion scenario, data is ingested from many geographically distributed devices into a data lake, data warehouse, or storage container. Managing data replication and troubleshooting errors across all of these devices can be difficult and time-consuming.

Resilio Platform provides a user-friendly graphical interface that makes managing and automating data replication easy.

Through Resilio’s Management Console, you can:

- Centrally manage every endpoint: You can control every endpoint in your environment from one location.

- Configure bandwidth profiles: You can control bandwidth allocation at each endpoint. You can even create bandwidth profiles that control how much bandwidth is allocated to each endpoint at certain times of the day and on certain days of the week. This allows you to adjust resource utilization in order to maximize efficiency and work around constraints during peak hours. And you can allocate a fraction of your available physical WAN bandwidth to specific endpoints and guarantee full utilization of that allocation.

- Adjust replication parameters: You can control how replication occurs by adjusting data hashing, disk I/O, packet size, and more.

- Control file priorities: You can prioritize replication jobs to ensure replication occurs in alignment with your business needs and network constraints.

- Monitor jobs: You can view job performance metrics, configure notifications (sent to email or webhooks), view job status, visualize and monitor transfers, and more.

- Review logs: You can review a history of all executed jobs for security and compliance. Logs are stored in JSON format. And Resilio integrates with event processing solutions such as Splunk, LogRhythm, Loggly, Grafana, and more.

- Automate troubleshooting: You can automate troubleshooting processes — such as file conflict resolution — to minimize data management time and the need for human intervention.
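A time-of-week bandwidth profile, as described in the list above, boils down to a rule lookup. The sketch below is hypothetical: the `BandwidthRule` format, the first-match-wins policy, and the Mbit/s units are assumptions, not Resilio's profile schema. Windows that cross midnight would need to be split into two rules.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class BandwidthRule:
    days: frozenset[int]  # 0 = Monday ... 6 = Sunday, as returned by datetime.weekday()
    start_hour: int       # inclusive
    end_hour: int         # exclusive
    limit_mbps: float


def current_limit(rules: list[BandwidthRule], default_mbps: float, now: datetime) -> float:
    """Return the bandwidth cap that applies at `now` (first matching rule wins)."""
    for rule in rules:
        if now.weekday() in rule.days and rule.start_hour <= now.hour < rule.end_hour:
            return rule.limit_mbps
    return default_mbps


WEEKDAYS = frozenset(range(5))
# Throttle a store's uplink during weekday business hours; run at full speed otherwise.
profile = [BandwidthRule(WEEKDAYS, 9, 18, 5.0)]
print(current_limit(profile, 50.0, datetime(2024, 3, 6, 10, 30)))  # 5.0 (Wednesday)
print(current_limit(profile, 50.0, datetime(2024, 3, 9, 10, 30)))  # 50.0 (Saturday)
```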

You can also use Resilio’s powerful REST API to script any type of functionality your job requires and automate transfers. The API enables you to create three types of scripting triggers for replication jobs:

- Before a job starts

- After a transfer is complete

- After all transfers are complete

This enables you to automate IoT data ingestion and have that data automatically sent to other solutions for processing, analysis, visualization, and more.
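The three trigger points can be pictured as optional hooks around a job's transfer loop. The runner below is a hypothetical sketch of that control flow only; it is not Resilio's REST API, and the hook names and callback signatures are assumptions. In a real pipeline, the after-transfer hook might hand each file to an analytics service.

```python
from typing import Callable, Optional

Hook = Optional[Callable[[str], None]]


def run_job(job_name: str, files: list[str], transfer: Callable[[str], None],
            before_job: Hook = None, after_transfer: Hook = None,
            after_all: Hook = None) -> None:
    """Run a toy replication job, firing optional hooks at three trigger points."""
    if before_job:
        before_job(job_name)          # e.g. check free disk space, mount storage
    for path in files:
        transfer(path)
        if after_transfer:
            after_transfer(path)      # e.g. queue this file for processing
    if after_all:
        after_all(job_name)           # e.g. notify downstream systems


events: list[str] = []
run_job(
    "cctv-ingest",
    ["cam1.mp4", "cam2.mp4"],
    transfer=lambda p: events.append(f"copy {p}"),
    before_job=lambda j: events.append(f"start {j}"),
    after_transfer=lambda p: events.append(f"queue {p} for analysis"),
    after_all=lambda j: events.append(f"notify {j} done"),
)
print(events[-1])  # notify cctv-ingest done
```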

Challenge 5: Ingesting Data in Complex Environments (i.e., Using Different Types of Devices, Tools, and Storage)

Most organizations need a replication solution that’s flexible enough to handle their replication needs in all scenarios. Some might need to ingest IoT data into multiple cloud storage services for disaster recovery or regulatory compliance. Others might need to ingest data from other types of data sources in addition to IoT devices.

In a typical IoT ingestion process, ingested data must go through filtering (i.e., removing erroneous or duplicated data), processing, enrichment, and analysis before it can be used. This requires transferring it to other data integration solutions, such as AWS (Amazon Web Services) IoT Greengrass Stream Manager, AWS IoT Analytics, AWS IoT Core, AWS IoT SiteWise, machine learning tools, and more.
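The filtering step mentioned above can be as simple as dropping malformed records and duplicate deliveries before data moves downstream. This is a hypothetical sketch: the record fields `device`, `ts`, and `value`, and the rule that a reading is a duplicate when device and timestamp match, are assumptions for illustration.

```python
def filter_readings(readings: list[dict]) -> list[dict]:
    """Drop malformed readings and duplicates (same device and timestamp)."""
    seen: set[tuple] = set()
    clean = []
    for r in readings:
        device, ts, value = r.get("device"), r.get("ts"), r.get("value")
        if device is None or ts is None or not isinstance(value, (int, float)):
            continue  # erroneous record: missing fields or non-numeric value
        key = (device, ts)
        if key in seen:
            continue  # duplicate delivery, e.g. from a retried transfer
        seen.add(key)
        clean.append(r)
    return clean


readings = [
    {"device": "pump-1", "ts": 1, "value": 3.2},
    {"device": "pump-1", "ts": 1, "value": 3.2},     # duplicate
    {"device": "pump-2", "ts": 1, "value": "ERR"},   # erroneous value
    {"ts": 2, "value": 1.0},                         # missing device id
    {"device": "pump-1", "ts": 2, "value": 3.4},
]
print(len(filter_readings(readings)))  # 2
```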

No matter your scenario, Resilio Platform is flexible enough to handle your replication needs.

Resilio supports just about any:

- Device: You can install Resilio agents on IoT devices, desktops, servers, laptops, NAS/DAS/SAN devices, and virtual machines.

- Cloud storage service: Resilio works with just about any cloud storage provider, such as AWS, GCP, Azure, Wasabi, MinIO, Backblaze, and more.

- Operating system: Resilio is compatible with Windows, Linux (including Ubuntu), macOS, Unix, FreeBSD, OpenBSD, and more.

Resilio allows the shipping agency Ross Maritime to sync data between the Linux server in their main office, the Android devices that their crews use, and the Windows servers at each port.

Resilio also partners with organizations such as NetApp, making it easy to integrate with their IoT data processing and utilization workflows.

And Resilio integrates with many solutions that are part of an IoT data workflow. For example, you can integrate with Grafana for anomaly detection and to create automatic alerts that detect performance issues on your devices, industrial machines, etc.

Because of its flexibility, Resilio can be utilized in a variety of different IoT and edge replication use cases, such as:

- Fleet/vehicle ingestion: You can ingest data from hundreds of vehicles when they come into network range. Agents on vehicles can be meshed together to create a distributed network spanning the entire fleet.

- Drones: Resilio reliably moves big data (such as video files) from your drone fleet, automatically offloading when in range of your Wi-Fi-enabled base.

- IoT devices: Resilio’s organic scalability makes it the best solution for collecting data from a large ecosystem of IoT devices. It also lets you roll out updates quickly, so your customers aren’t left exposed to costly and embarrassing security vulnerabilities while they wait on the device update cycle.

- Industrial sensors: Resilio’s network optimization features enable it to operate efficiently in areas with intermittent connectivity, making it perfect for industrial applications that operate in areas with unreliable networks.

- Retail: With Resilio, retail organizations operating in low/poor connectivity areas can avoid overloading limited connections. No more sending physical devices in the mail to update store or employee software.

- Body & dash cameras: Resilio can offload big video assets from thousands of cameras. And if a camera is called to duty before an offload is complete, Resilio can automatically perform a checkpoint restart to resume the transfer from the point of failure.

- Weather and climate data ingestion: You can use Resilio to ingest large data sets, such as staged forecasts, meteorological models, and more.

- Extreme edge: You can collect real-time data, log files, and bulk files from locations at the extreme edge, such as mines, oil fields, pipelines, at sea, and more.

- Update deployment: You can also use Resilio to quickly and reliably deploy updates to your servers and applications. Our client VoiceBase uses Resilio to distribute software updates 88% faster than their previous solution.

Challenge 6: Keeping Data Secure

While security is always important when transferring data, it becomes even more important when ingesting IoT data because IoT ecosystems/devices are dependent on internet connectivity and have large attack surfaces.

Because IoT ecosystems can grow rapidly, security protocols must be implemented by design at the core of your IoT data pipeline. Incorporating security protocols later is more complex and challenging, requiring architectural and/or design changes.

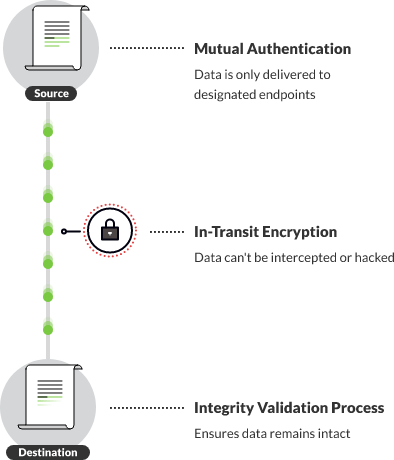

Many file replication and sync solutions don’t include native security features, forcing you to buy and use 3rd-party security solutions, such as VPNs. But Resilio Platform includes built-in security features that address key challenges of securing IoT data and place security at the core of your IoT pipeline. These features include:

- End-to-end encryption: Without secure data encryption, unauthorized users can eavesdrop on and manipulate your IoT traffic. Resilio encrypts data at rest and in transit using AES 256-bit encryption.

- Mutual authentication: IoT data ingestion requires a strong authentication mechanism on each device to prevent unauthorized access to the device, spoofing, and hackers gaining access to your cloud. Resilio requires every device with a Resilio agent to provide a security key before it can receive any data, ensuring your data is only delivered to approved, authenticated endpoints.

- Cryptographic integrity validation: As stated earlier, Resilio preserves file integrity with cryptographic validation.

- Proxy server: Resilio now includes proxy and port-forwarding options. This gives your IT team more control over network traffic and security, allowing you to control programmatic access of specific systems/devices, configure outbound connections, use a single IP address behind the corporate firewall, provide end-users with more control over where/how data is stored, control access to specific files/folders on your machines, and more.

- Permission controls: You can control who is allowed to access files and folders.
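The article states that each agent must present a security key before receiving data, but it does not describe the protocol. The sketch below shows a generic challenge-response form of mutual authentication using HMAC-SHA256 from Python's standard library; it is a hypothetical illustration, not Resilio's mechanism. A production protocol would also bind endpoint identities into the MAC input (to prevent reflection attacks) and run inside an encrypted channel.

```python
import hashlib
import hmac
import secrets


def make_challenge() -> bytes:
    """Fresh random nonce, so old responses cannot be replayed."""
    return secrets.token_bytes(16)


def respond(key: bytes, challenge: bytes) -> bytes:
    """Prove knowledge of the key without sending the key itself."""
    return hmac.new(key, challenge, hashlib.sha256).digest()


def verify(key: bytes, challenge: bytes, response: bytes) -> bool:
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(respond(key, challenge), response)


def mutual_authenticate(key_a: bytes, key_b: bytes) -> bool:
    """Each side challenges the other; both must pass before data is exchanged."""
    challenge_from_a = make_challenge()
    challenge_from_b = make_challenge()
    b_proves = verify(key_a, challenge_from_a, respond(key_b, challenge_from_a))
    a_proves = verify(key_b, challenge_from_b, respond(key_a, challenge_from_b))
    return a_proves and b_proves


shared = secrets.token_bytes(32)
print(mutual_authenticate(shared, shared))                   # True
print(mutual_authenticate(shared, secrets.token_bytes(32)))  # False
```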

Use Resilio Platform for IoT Data Ingestion

Resilio Platform is the best solution for IoT data ingestion because of its:

- P2P replication: Resilio’s distributed peer-to-peer architecture eliminates centralized distribution and effectively turns every endpoint into a data center. This allows you to transfer data quickly, scale organically, and eliminate single points of failure.

- WAN optimization protocol: Resilio’s proprietary WAN acceleration protocol enables you to fully utilize the bandwidth of any type of network connection and transfer data in a predictable and timely manner.

- Fault-tolerant features: Resilio retries all transfers until they’re complete, resumes interrupted transfers at the point of failure, dynamically reroutes around outages, and more.

- Centralized management and automation: Resilio enables you to control and automate data ingestion from a single, centralized location. You can monitor replication jobs, control replication parameters, and script any type of functionality your job requires.

- Flexibility: Resilio supports just about any type of device, cloud storage service, and operating system. You can easily integrate Resilio with many tools you need for your IoT data pipeline.

- Native security: Resilio includes built-in security features that protect your data at rest and in transit.
